
We live in an age of exponential growth in knowledge, and it is increasingly futile to teach only polished theorems and proofs. We must abandon the guided tour through the art gallery of mathematics, and instead teach how to create the mathematics we need. In my opinion, there is no long-term practical alternative.

—Richard Hamming

Foreword

Like many electrical engineers, I grew up in a world shaped by Richard Hamming. My graduate thesis was based on coding theory; my early career on digital filters and numerical methods. I loved these subjects, took them for granted, and had no idea how they came to be.

I got my first hint upon encountering “You and Your Research,” Hamming’s electrifying sermon on why some scientists do great work, why most don’t, why he did, and why you should too. Most thrilling was Hamming’s vivid image of greatness, and its unapologetic pursuit. Not only was it allowable to aim for greatness, it was cowardly not to. It was the most inspirational thing I’d ever read.

The Art of Doing Science and Engineering is the full, beautiful expression of what “You and Your Research” sketched in outline. In this delightfully earnest parody of a textbook, chapters on “Digital Filters” and “Error-Correcting Codes” do not, in fact, teach those things at all, but rather exist to teach the style of thinking by which these great ideas were conceived.

This is a book about thinking. One cannot talk about thinking in the abstract, at least not usefully. But one can talk about thinking about digital filters, and by studying how great scientists thought about digital filters, one learns, however gradually, to think like a great scientist.

Among the most remarkable attributes of Hamming’s style of thinking is its sweeping range of scale. At the micro level, we see the close, deliberate examination of everyone and everything, from the choice of complex exponentials as basis functions to the number of jokes in an after-dinner speech. Nothing is taken for granted or left unquestioned, but is picked up and turned over curiously, intently, searching for

And then, even in the same sentence, a zoom out to the wide shot, the subject contextualized against the broad scientific landscape, a link in the long chain of history. Just as nothing is taken for granted, nothing is taken in isolation.

For one accustomed to the myopia of day-to-day work in a field, so jammed against the swaggering parade of passing trends that one can hardly see beyond them or beneath them, such shifts in viewpoint are exhilarating—a reminder that information may be abundant but wisdom is rare.

After all, where today can the serious student of scientific creativity observe the master at work, short of apprenticeship? Histories are written for spectators; textbooks teach tools, not craft. We can gather small hints from the loose reflections of Hadamard, the lofty theories of Koestler, the tactical heuristics of Pólya and Altshuller.

But Hamming stands alone. Certainly for the thoroughness with which he presents his style of thinking, and more so for explicitly identifying style as a thing that exists and can be learned in the first place. But most of all, for his expectation—his insistence—that the reader is destined to join him in extending the arc of history, to become a great person who does great work. In this tour of scientific greatness, the reader is not a passenger, but a driver in training.

This book is filled with great people doing great work, and to successfully appreciate Hamming’s expectations, it is valuable to consider exactly what he meant by “great people,” and by “great work.”

* * *

In Hamming’s world, great people do and the rest do not. Hamming’s heroes assume almost mythical stature, swashbuckling across the scientific frontier, generating history in their wake. And yet, it is well-known that science is a fundamentally social enterprise, advanced by the intermingling ideas and cumulative contributions of an untold mass of names which, if not lost to the ages, are at least absent from Wikipedia.

Hamming’s own career reflects this contradiction. He was employed essentially as a kind of internal mathematical consultant; he spent his days helping other people with their problems, often problems of a practical and mundane nature. Rather than begrudging this work, he saw it as the “interaction with harsh reality” necessary to keep his head out of the clouds, and at best, the continuous production of “micro-Nobel Prizes.” And most critically, all of his “great” work, his many celebrated inventions, grew directly out of these problems he was solving for other people.

Throughout, Hamming insisted on an open door, lunched with anyone he could learn from or argue with, stormed in and out of colleagues’ offices, and otherwise made indisputable the social dimensions of advancing a field.

Hamming’s conviction—indeed, obsession—was the opposite: that this greatness was less a matter of genius (or divinity), and more a kind of virtuosity. He saw these undeniably great figures as human beings that had learned how to do something, and by studying them, he could learn it too.

And he did, or so his narrative goes: against a background of colleagues more talented, more knowledgeable, more supported, better equipped along every axis, Hamming was the one to invent the inventions, found the fields, win the awards, and generally transcend his times.

And if he could, then so could anyone. Hamming was always as much a teacher as a scientist, and having spent a lifetime forming and confirming a theory of great people, he felt he could prepare the next generation for even greater greatness. That’s the premise and promise of this book.

Hamming-greatness is thus more a practice than a trait. This book is full of great people performing mighty deeds, but they are not here to be admired. They are to be aspired to, learned from, and surpassed.

* * *

Hamming leaves the definition of “great work” open, encouraging the reader to “pick your goals of excellence” and “strive for greatness as you see it.” Despite this apparent generosity, the sort of work that Hamming considered “great” had a distinct shape, and tracing that shape reveals the deepest message of the book.

There are many commonalities we can admire in these endeavors: the dazzling leap of imagination, the broad scope of applicability, the founding of a new paradigm. But let’s focus here on their form of distribution. These are all things that are taught. To “use” them means to learn them, understand them, internalize them, perform them with one’s own hands. They are free to any open mind.

In Hamming’s world, great achievements are gifts of knowledge to humanity.

Looking at this work in retrospect, it can be hard to imagine it having taken any other form. It’s possible to see these past gifts as inevitable, without realizing how much the present-day winds have shifted. Many of the bright young students who, in Hamming’s day, would have pursued a doctorate and a research career, today find themselves pursued by venture capital. Steve Jobs has replaced Einstein as cultural icon, and the brass ring is no longer the otherworldly Nobel Prize, but the billion-dollar acquisition. Universities are dominated by “technology transfer” activities, and engineering professors—even some in the sciences—are looked at askance if they aren’t running a couple startups on the side.

One can imagine the encouraged path, the inevitable-seeming path, for a present-day inventor of error-correcting codes: error-correcting.com, encoding as a service, all ingenuity hidden behind an API. Bait to be swallowed by some hulking multinational. Hamming wrote books.

It’s crucial to emphasize that the “great work” Hamming extols had no entrepreneurial component whatsoever. Gifts to humanity—to be learned by minds, not downloaded to phones.

As we read Hamming’s reminiscences of lunching with Shockley, Brattain, and Bardeen—lauded today as “inventors of the transistor”—let us keep in mind how they viewed themselves: “discoverers” of “transistor action.” A phenomenon, not a product; to be described, not owned and rented out. Their Nobel Prize reads “discovery of the transistor effect.” Hamming’s account lingers on the prize and is silent on the patents.

This book is adapted from a course that Hamming taught at the U.S. Naval Postgraduate School, to cohorts of “carefully selected Navy, Marine, Army, Air Force, and Coast Guard students with very few civilians.” In a recording of the introductory lecture, we hear Hamming tell his students:

The Navy is paying a large sum of money to have you here, and it wants its money back by your later performance.

The United States Navy does not want its money back with an IPO. Regardless of one’s orientation toward military engineering, there is no question that these students are expected and expecting to serve the public interest.

Hamming-greatness is tied, inseparably, with the conception of science and engineering as public service. This school of thought is not extinct today, but it is rare, and doing such work is not impossible, but fights a nearly overwhelming current.

Yet, to Hamming, bad conditions are no excuse for bad work. You will find in these pages ample motivation to use your one life to do great work, work that transcends its times, regardless of the conditions you find yourself in.

As you proceed, I invite you to study not just Hamming’s techniques for achieving greatness, but the specific kind of greatness he achieved. I invite you to be inspired not just by Hamming’s success, but by his gifts to humanity, among the highest of which is surely this book itself.

Bret Victor

Cambridge, Massachusetts

December 2018

The Art of Doing Science and Engineering: Learning to Learn

© 2020 Stripe Press

CRC Press edition published 1996

Stripe Press edition published 2020

All rights reserved. No part of this publication may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording or any other information storage and retrieval system, without prior permission in writing from the publisher.

Mathematics proofreading for this edition by Dave Hinterman

Published in the United States of America by Stripe Press / Stripe Matter Inc.

Stripe Press

Ideas for progress

San Francisco, California

Printed by Hemlock in Canada

ebook design by Bright Wing Media

ISBN: 978-1-7322651-7-2

Fourth Edition

Preface

After many years of pressure and encouragement from friends, I decided to write up the graduate course in engineering I teach at the U.S. Naval Postgraduate School in Monterey, California. At first I concentrated on all the details I thought should be tightened up, rather than leave the material as a series of somewhat disconnected lectures. In class the lectures often followed the interests of the students, and many of the later lectures were suggested topics in which they expressed an interest. Also, the lectures changed from year to year as various areas developed. Since engineering depends so heavily these days on the corresponding sciences, I often use the terms interchangeably.

After more thought I decided that since I was trying to teach “

Since my classes are almost all carefully selected Navy, Marine, Army, Air Force, and Coast Guard students with very few civilians, and, interestingly enough, about 15 percent very highly selected foreign military, the students face a highly technical future—hence the importance of preparing them for their future and not just our past.

This course is mainly personal experiences I have had and digested, at least to some extent. Naturally one tends to remember one’s successes and forget lesser events, but I recount a number of my spectacular failures as clear examples of what to avoid. I have found that the personal story is far, far more effective than the impersonal one; hence there is necessarily an aura of “bragging” in the book that is unavoidable.

Let me repeat what I earlier indicated. Apparently an “art”—which almost by definition cannot be put into words—is probably best communicated by approaching it from many sides and doing so repeatedly, hoping thereby students will finally master enough of the art, or if you wish, style, to significantly increase their future contributions to society. A totally different description of the course is: it covers all kinds of things that could not find their proper place in the standard curriculum.

The casual reader should not be put off by the mathematics; it is only “window dressing” used to illustrate and connect up with earlier learned material. Usually the underlying ideas can be grasped from the words alone.

It is customary to thank various people and institutions for help in producing a book. Thanks obviously go to AT&T Bell Laboratories, Murray Hill, New Jersey, and to the U.S. Naval Postgraduate School, especially the Department of Electrical and Computer Engineering, for making this book possible.

Introduction

This book is concerned more with the future and less with the past of science and engineering. Of course future predictions are uncertain and usually based on the past; but the past is also much more uncertain—or even falsely reported—than is usually recognized. Thus we are forced to imagine what the future will probably be. This course has been called “Hamming on Hamming” since it draws heavily on my own past experiences, observations, and wide reading.

There is a great deal of mathematics in the early part because almost surely the future of science and engineering will be more mathematical than the past, and also I need to establish the nature of the foundations of our beliefs and their uncertainties. Only then can I show the weaknesses of our current beliefs and indicate future directions to be considered.

If you find the mathematics difficult, skip those early parts. Later sections will be understandable provided you are willing to forgo the deep insights mathematics gives into the weaknesses of our current beliefs. General results are always stated in words, so the content will still be there but in a slightly diluted form.

1 Orientation

The course is concerned with “style,” and almost by definition style cannot be taught in the normal manner by using words. I can only approach the topic through particular examples, which I hope are well within your grasp, though the examples come mainly from my 30 years in the mathematics department of the Research Division of Bell Telephone Laboratories (before it was broken up). It also comes from years of study of the work of others.

I have found that to be effective in this course I must use mainly firsthand knowledge, which implies I break a standard taboo and talk about myself in the first person, instead of the traditional impersonal way of science. You must forgive me in this matter, as there seems to be no other approach which will be as effective. If I do not use direct experience, then the material will probably sound to you like merely pious words and have little impact on your minds, and it is your minds I must change if I am to be effective.

This talking about first-person experiences will give a flavor of “bragging,” though I include a number of my serious errors to partially balance things. Vicarious learning from the experiences of others saves making errors yourself, but I regard the study of successes as being basically more important than the study of failures. As I will several times say, there are so many ways of being wrong and so few of being right, studying successes is more efficient, and furthermore when your turn comes you will know how to succeed rather than how to fail!

I am, as it were, only a coach. I cannot run the mile for you; at best I can discuss styles and criticize yours. You know you must run the mile if the athletics course is to be of benefit to you—hence you must think carefully about what you hear or read in this book if it is to be effective in changing you—which must obviously be the purpose of any course. Again, you will get out of this course only as much as you put in, and if you put in little effort beyond sitting in the class or reading the book, then it is simply a waste of your time. You must also mull things over, compare what I say with your own experiences, talk with others, and make some of the points part of your way of doing things.

Since the subject matter is “style,” I will use the comparison with teaching painting. Having learned the fundamentals of painting, you then study under a master you accept as being a great painter; but you know you must forge your own style out of the elements of various earlier painters plus your native abilities. You must also adapt your style to fit the future, since merely copying the past will not be enough if you aspire to future greatness—a matter I assume, and will talk about often in the book. I will show you my style as best I can, but, again, you must take those elements of it which seem to fit you, and you must finally create your own style. Either you will be a leader or a follower, and my goal is for you to be a leader. You cannot adopt every trait I discuss in what I have observed in myself and others; you must select and adapt, and make them your own if the course is to be effective.

Even more difficult than what to select is that what is a successful style in one age may not be appropriate to the next age! My predecessors at Bell Telephone Laboratories used one style; four of us who came in all at about the same time, and had about the same chronological age, found our own styles, and as a result we rather completely transformed the overall style of the mathematics department, as well as many parts of the whole Laboratories. We privately called ourselves “the four young Turks,” and many years later I found top management had called us the same!

I return to the topic of education. You all recognize there is a significant difference between education and training.

Education is what, when, and why to do things.

Training is how to do it.

and the amount of knowledge produced annually has a constant k of proportionality to the number of scientists alive. Assuming we begin at minus infinity in time (the error is small and you can adjust it to Newton’s time if you wish), we have the formula

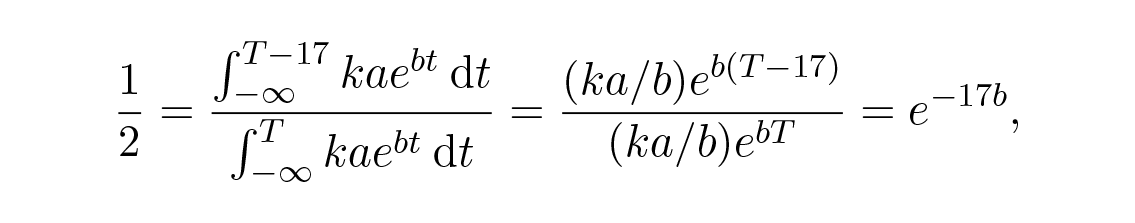

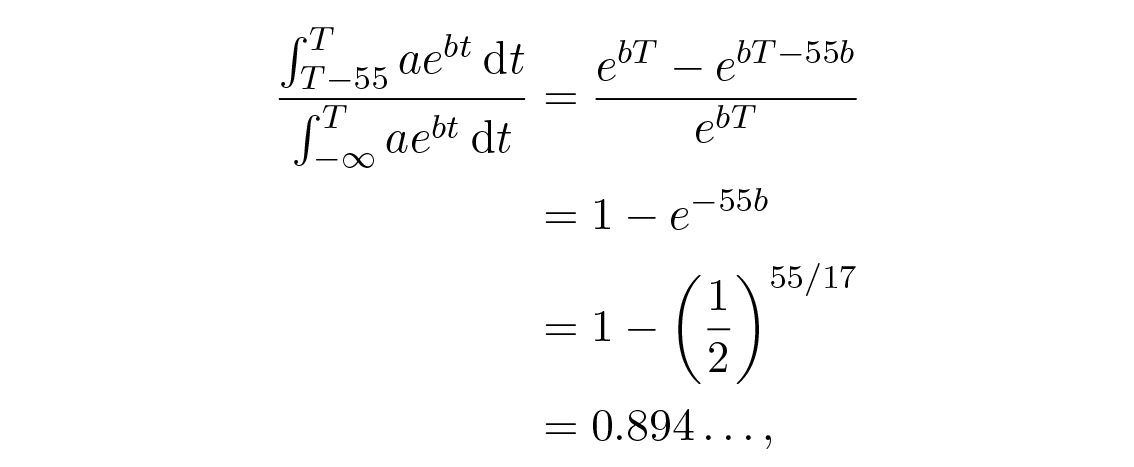

hence we know b. Now to the other statement. If we allow the lifetime of a scientist to be 55 years (it seems likely that the statement meant living and not practicing, but excluding childhood) then we have

which is very close to 90%.

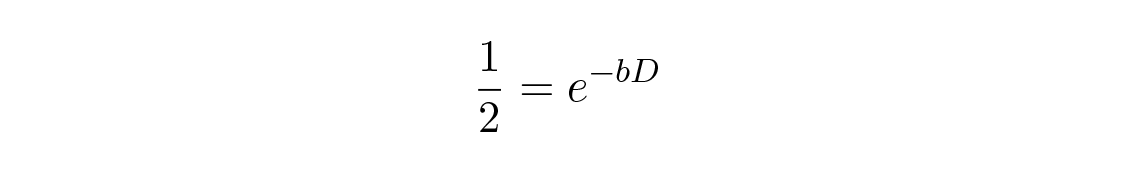

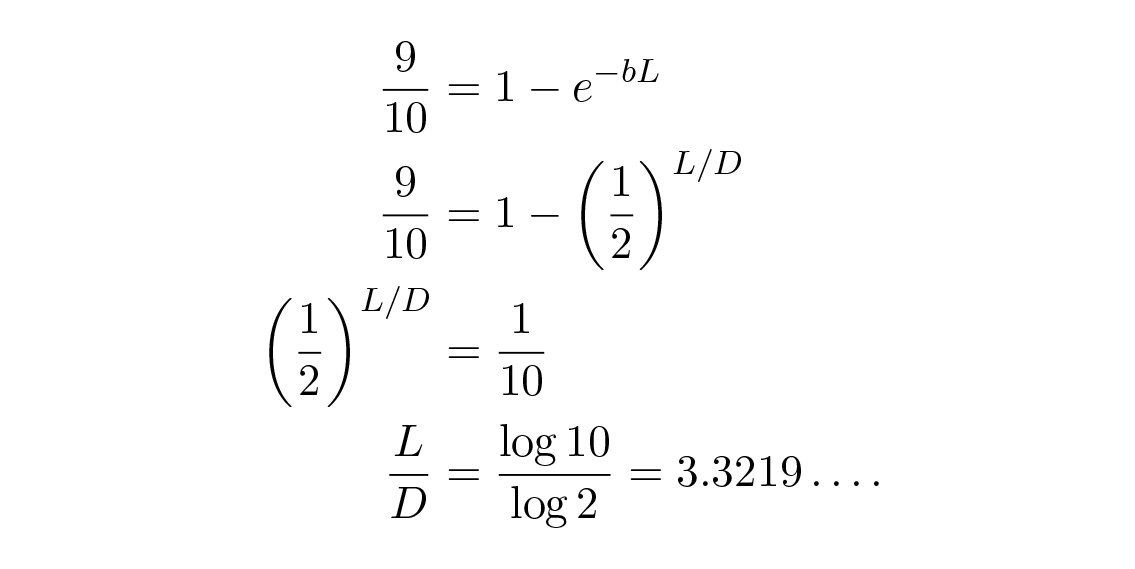

Typically the first back-of-the-envelope calculations use, as we did, definite numbers where one has a feel for things, and then we repeat the calculations with parameters so you can adjust things to fit the data better and understand the general case. Let the doubling period be D and the lifetime of a scientist be L. The first equation now becomes

and the second becomes

With D = 17 years we have 17 × 3.3219 = 56.47… years for the lifetime of a scientist, which is close to the 55 we assumed. We can play with the ratio of L/D until we find a slightly closer fit to the data (which was approximate, though I believe more in the 17 years for doubling than I do in the 90%). Back-of-the-envelope computing indicates the two remarks are reasonably compatible. Notice the relationship applies for all time so long as the assumed simple relationships hold.
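The arithmetic above is easy to check by machine. This Python sketch assumes the same simple model as the derivation: exponential growth with doubling period D, so the fraction of all scientists who ever lived who are alive today is $1 - 2^{-L/D}$ for a lifetime L. The function names are illustrative, not part of the original text.

```python
import math


def fraction_alive(lifetime: float, doubling: float) -> float:
    """Fraction of all scientists who ever lived who are alive now,
    assuming exponential growth with e^(b*D) = 2, i.e. 1 - 2^(-L/D)."""
    return 1.0 - 2.0 ** (-lifetime / doubling)


def lifetime_for_fraction(fraction: float, doubling: float) -> float:
    """Invert 1 - 2^(-L/D) = fraction to get L = D * log2(1 / (1 - fraction))."""
    return doubling * math.log2(1.0 / (1.0 - fraction))


print(round(fraction_alive(55, 17), 3))          # 0.894
print(round(lifetime_for_fraction(0.9, 17), 2))  # 56.47
```

Varying the two arguments is exactly the "playing with the ratio L/D" described above.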

Added to the problem of the growth of new knowledge is the obsolescence of old knowledge. It is claimed by many the half-life of the technical knowledge you just learned in school is about 15 years—in 15 years half of it will be obsolete (either we will have gone in other directions or will have replaced it with new material). For example, having taught myself a bit about vacuum tubes (because at Bell Telephone Laboratories they were at that time obviously important) I soon found myself helping, in the form of computing, the development of transistors—which obsoleted my just-learned knowledge!
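The half-life claim is ordinary exponential decay. Assuming the quoted 15-year figure holds steadily (an assumption; the text offers it only as a rough claim), the fraction of school knowledge still current after t years is $0.5^{t/15}$. A minimal sketch, with a hypothetical function name:

```python
def fraction_still_current(years: float, half_life: float = 15.0) -> float:
    """Share of what you learned in school that is not yet obsolete after
    `years`, assuming simple exponential decay with the given half-life."""
    return 0.5 ** (years / half_life)


for t in (15, 30, 45):
    print(t, fraction_still_current(t))  # 0.5, 0.25, 0.125
```

Under this assumption, only about a quarter of your schooling is still current after a 30-year career.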

To bring the meaning of this doubling down to your own life, suppose you have a child when you are x years old. That child will face, when it is in college, about y times the amount you faced.

| Factor of increase, y | Years, x |
|---|---|
| 2 | 17 |
| 3 | 27 |
| 4 | 34 |
| 5 | 39 |
| 6 | 44 |
| 7 | 48 |
| 8 | 51 |
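The table follows from the 17-year doubling period: a growth factor of y takes $x = 17\log_2 y$ years. A short sketch that regenerates it, assuming that doubling period and rounding to whole years (the names are illustrative):

```python
import math

DOUBLING_YEARS = 17  # doubling period of knowledge assumed in the text


def years_for_factor(factor: float, doubling_years: float = DOUBLING_YEARS) -> int:
    """Years x needed for knowledge to grow by `factor`, from 2^(x/D) = factor."""
    return round(doubling_years * math.log2(factor))


for y in range(2, 9):
    print(y, years_for_factor(y))
```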

This doubling is not just in theorems of mathematics and technical results, but in musical recordings of Beethoven’s Ninth, of where to go skiing, of TV programs to watch or not to watch. If you were at times awed by the mass of knowledge you faced when you went to college, or even now, think of your children’s troubles when they are there! The technical knowledge involved in your life will quadruple in 34 years, and many of you will then be near the high point of your career. Pick your estimated years to retirement and then look in the left-hand column for the probable factor of increase over the present knowledge when you finally quit!

I need to discuss science vs. engineering. Put glibly:

In science, if you know what you are doing, you should not be doing it.

In engineering, if you do not know what you are doing, you should not be doing it.

Of course, you seldom, if ever, see either pure state. All of engineering involves some creativity to cover the parts not known, and almost all of science includes some practical engineering to translate the abstractions into practice. Much of present science rests on engineering tools, and as time goes on, engineering seems to involve more and more of the science part. Many of the large scientific projects involve very serious engineering problems; the two fields are growing together! Among other reasons for this situation is almost surely that we are going forward at an accelerated pace, and now there is not time to allow us the leisure which comes from separating the two fields. Furthermore, both the science and the engineering you will need for your future will more and more often be created after you have left school. Sorry! But you will simply have to actively master on your own the many new emerging fields as they arise, without having the luxury of being passively taught.

It should be noted that engineering is not just applied science, which is a distinct third field (though it is not often recognized as such) which lies between science and engineering.

I read somewhere there are 76 different methods of predicting the future, but the very number suggests there is no reliable method which is widely accepted. The most trivial method is to predict tomorrow will be exactly the same as today, which at times is a good bet. The next level of sophistication is to use the current rates of change and to suppose they will stay the same: linear prediction in the variable used. Which variable you use can, of course, strongly affect the prediction made! Neither method is much good for long-term predictions, however.
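To see how much the choice of variable matters, consider a hypothetical quantity observed to double over 17 years. The sketch below compares the trivial forecast, a straight line in the quantity itself, and a straight line in its logarithm; the data and function name are illustrative only.

```python
import math


def linear_extrapolate(t0, v0, t1, v1, t):
    """Straight-line prediction through (t0, v0) and (t1, v1), evaluated at t."""
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


# Hypothetical observations: a quantity that doubled over 17 years.
t0, v0 = 0.0, 1.0
t1, v1 = 17.0, 2.0
t = 51.0  # forecast 34 years past the last observation

same_as_today = v1  # "tomorrow will be exactly the same as today"
linear_in_value = linear_extrapolate(t0, v0, t1, v1, t)
linear_in_log = 2 ** linear_extrapolate(t0, math.log2(v0), t1, math.log2(v1), t)

print(same_as_today, linear_in_value, linear_in_log)  # 2.0 4.0 8.0
```

All three forecasts fit the same two observations, yet 34 years out they disagree by a factor of four.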

The past was once the future and the future will become the past.

In any case, I will often use history as a background for the extrapolations I make. I believe the best predictions are based on understanding the fundamental forces involved, and this is what I depend on mainly. Often it is not physical limitations which control but rather it is human-made laws, habits, and organizational rules, regulations, personal egos, and inertia which dominate the evolution to the future. You have not been trained along these lines as much as I believe you should have been, and hence I must be careful to include them whenever the topics arise.

There is a saying, “Short-term predictions are always optimistic and long-term predictions are always pessimistic.” The reason, so it is claimed, the second part is true is that for most people the geometric growth due to the compounding of knowledge is hard to grasp. For example, for money, a mere 6% annual growth doubles the money in about 12 years! In 48 years the growth is a factor of 16. An example of the truth of this claim that most long-term predictions are low is the growth of the computer field in speed, in density of components, in drop in price, etc., as well as the spread of computers into the many corners of life. But the field of artificial intelligence (AI) provides a very good counterexample. Almost all the leaders in the field made long-term predictions which have almost never come true, and are not likely to do so within your lifetime, though many will in the fullness of time.
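The compound-growth figures are easy to check. Assuming annual compounding at 6%, the exact doubling time is $\ln 2 / \ln 1.06$, slightly under 12 years, which is why 48 years gives a factor a little above 16. A minimal sketch with an illustrative function name:

```python
import math


def doubling_time(rate: float) -> float:
    """Years for a quantity compounding annually at `rate` to double."""
    return math.log(2) / math.log1p(rate)


rate = 0.06
print(round(doubling_time(rate), 1))  # 11.9
print(round((1 + rate) ** 48, 1))     # 16.4
```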

- History is seldom reported at all accurately, and I have found that no two reports of what happened at Los Alamos during WWII agree.

- Due to the pace of progress the future is rather disconnected from the past; the presence of the modern computer is an example of the great differences which have arisen.

Reading some historians you get the impression the past was determined by big trends, but you also have the feeling the future has great possibilities. You can handle this apparent contradiction in at least four ways:

- You can simply ignore it.

- You can admit it.

- You can decide the past was a lot less determined than historians usually indicate, and individual choices can make large differences at times. Alexander the Great, Napoleon, and Hitler had great effects on the physical side of life, while Pythagoras, Plato, Aristotle, Newton, Maxwell, and Einstein are examples on the mental side.

- You can decide the future is less open-ended than you would like to believe, and there is really less choice than there appears to be.

It is probable the future will be more limited by the slow evolution of the human animal and the corresponding human laws, social institutions, and organizations than it will be by the rapid evolution of technology.

In spite of the difficulty of predicting the future and that

unforeseen technological inventions can completely upset the most careful predictions,

you must try to foresee the future you will face. To illustrate the importance of this point of trying to foresee the future I often use a standard story.

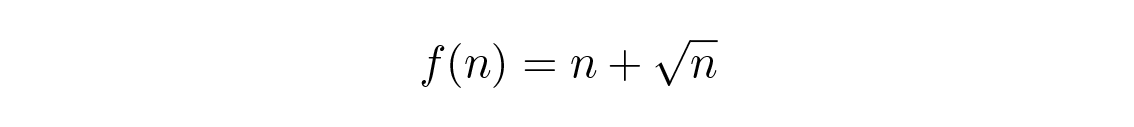

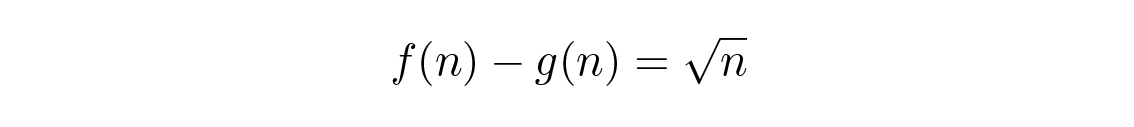

steps from the origin. But if there is a pretty girl in one direction, then his steps will tend to go in that direction and he will go a distance proportional to n. In a lifetime of many, many independent choices, small and large, a career with a vision will get you a distance proportional to n, while no vision will get you only the distance

steps from the origin. But if there is a pretty girl in one direction, then his steps will tend to go in that direction and he will go a distance proportional to n. In a lifetime of many, many independent choices, small and large, a career with a vision will get you a distance proportional to n, while no vision will get you only the distance  . In a sense, the main difference between those who go far and those who do not is some people have a vision and the others do not and therefore can only react to the current events as they happen.

. In a sense, the main difference between those who go far and those who do not is some people have a vision and the others do not and therefore can only react to the current events as they happen.No vision, not much of a future.
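A small simulation makes the $\sqrt{n}$ versus $n$ contrast concrete. This sketch assumes a one-dimensional walk and an arbitrary 20% bias toward the attractive direction; the bias, the step counts, and the function name are illustrative choices, not figures from the text.

```python
import random


def rms_distance(n_steps, trials, bias, rng):
    """Root-mean-square distance from the origin after `n_steps` of a
    one-dimensional walk. Each step is +1 with probability (1 + bias) / 2
    and -1 otherwise, so bias=0 is the pure drunken walk."""
    p_forward = (1 + bias) / 2
    total = 0.0
    for _ in range(trials):
        position = 0
        for _ in range(n_steps):
            position += 1 if rng.random() < p_forward else -1
        total += position * position
    return (total / trials) ** 0.5


rng = random.Random(42)  # fixed seed so runs are repeatable
for n in (400, 1600):
    no_vision = rms_distance(n, 200, 0.0, rng)
    vision = rms_distance(n, 200, 0.2, rng)
    print(f"n={n}: no vision ~{no_vision:.0f} steps, vision ~{vision:.0f} steps")
```

Quadrupling n should roughly double the undirected distance (the $\sqrt{n}$ law, about 20 then 40 steps) while roughly quadrupling the directed one (close to $0.2n$, about 80 then 320 steps).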

To what extent history does or does not repeat itself is a moot question. But it is one of the few guides you have, hence history will often play a large role in my discussions—I am trying to provide you with some perspective as a possible guide to create your vision of your future. The other main tool I have used is an active imagination in trying to see what will happen. For many years I devoted about 10% of my time (Friday afternoons) to trying to understand what would happen in the future of computing, both as a scientific tool and as a shaper of the social world of work and play. In forming your plan for your future you need to distinguish three different questions:

What is possible?

What is likely to happen?

What is desirable to have happen?

In a sense the first is science—what is possible. The second is engineering—what are the human factors which choose the one future that does happen from the ensemble of all possible futures. The third is ethics, morals, or whatever other word you wish to apply to value judgments. It is important to examine all three questions, and insofar as the second differs from the third, you will probably have an idea of how to alter things to make the more desirable future occur, rather than let the inevitable happen and suffer the consequences. Again, you can see why having a vision is what tends to separate the leaders from the followers.

The standard process of organizing knowledge by departments, and sub-departments, and further breaking it up into separate courses, tends to conceal the homogeneity of knowledge, and at the same time to omit much which falls between the courses. The optimization of the individual courses in turn means a lot of important things in engineering practice are skipped since they do not appear to be essential to any one course. One of the functions of this book is to mention and illustrate many of these missed topics which are important in the practice of science and engineering. Another goal of the course is to show the essential unity of all knowledge rather than the fragments which appear as the individual topics are taught. In your future anything and everything you know might be useful, but if you believe the problem is in one area you are not apt to use information that is relevant but which occurred in another course.

| Advantage | How computers compare with humans |
|---|---|
| Economics | Far cheaper, and getting more so |
| Speed | Far, far faster |
| Accuracy | Far more accurate (precise) |
| Reliability | Far ahead (many have error correction built into them) |
| Rapidity of control | Many current airplanes are unstable and require rapid computer control to make them practical |
| Freedom from boredom | An overwhelming advantage |
| Bandwidth in and out | Again overwhelming |
| Ease of retraining | Change programs, not unlearn and then learn the new thing, consuming hours and hours of human time and effort |
| Hostile environments | Outer space, underwater, high-radiation fields, warfare, manufacturing situations that are unhealthful, etc. |
| Personnel problems | They tend to dominate management of humans but not of machines; with machines there are no pensions, personal squabbles, unions, personal leave, egos, deaths of relatives, recreation, etc. |

I need not list the advantages of humans over computers—almost every one of you has already objected to this list and has in your mind started to cite the advantages on the other side.

The unexamined life is not worth living.

2 Foundations of the digital (discrete) revolution

We are approaching the end of the revolution of going from signaling with continuous signals to signaling with discrete pulses, and we are now probably moving from using pulses to using

Why has this revolution happened?

- In continuous signaling (transmission) you often have to amplify the signal to compensate for natural losses along the way. Any error made at one stage, before or during amplification, is naturally amplified by the next stage. For example, the telephone company, in sending a voice across the continent, might have a total amplification factor of $10^{120}$. At first $10^{120}$ seems to be very large, so we do a quick back-of-the-envelope modeling to see if it is reasonable. Consider the system in more detail. Suppose each amplifier has a gain of 100, and they are spaced every 50 miles. The actual path of the signal may well be over 3,000 miles, hence some 60 amplifiers, and $100^{60} = 10^{120}$, so the above factor does seem reasonable now that we have seen how it can arise. It should be evident such amplifiers had to be built with exquisite accuracy if the system was to be suitable for human use.
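The back-of-the-envelope model takes only a few lines. This sketch uses the figures in the text (a 3,000-mile path, an amplifier every 50 miles, a gain of 100 each) and adds one illustrative assumption, a 1% gain error per stage, to show why the amplifiers needed such accuracy.

```python
route_miles = 3000        # length of the transcontinental path
spacing_miles = 50        # one amplifier every 50 miles
gain_per_amplifier = 100  # gain of each amplifier

n_amplifiers = route_miles // spacing_miles
total_gain = gain_per_amplifier ** n_amplifiers  # exact integer arithmetic

print(n_amplifiers)             # 60
print(total_gain == 10 ** 120)  # True

# Hypothetical: each amplifier's gain is 1% too high; the errors multiply.
drift = 1.01 ** n_amplifiers
print(round(drift, 2))          # 1.82
```

A mere 1% error per stage leaves the received level off by roughly 82%.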

Compare this to discrete signaling. At each stage we do not amplify the signal, but rather we use the incoming pulse to gate, or not, a standard source of pulses; we actually use repeaters, not amplifiers. Noise introduced at one spot, if not so large as to make the pulse detection wrong at the next repeater, is automatically removed. Thus with remarkable fidelity we can transmit a voice signal if we use digital signaling, and furthermore the equipment need not be built extremely accurately. We can use, if necessary, error-detecting and error-correcting codes to further defeat the noise. We will examine these codes later, in Chapters 10–12. Along with this we have developed the area of digital filters, which are often much more versatile, compact, and cheaper than analog filters (Chapters 14–17). We should note here that transmission through space (typically signaling) is the same as transmission through time (storage).
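The contrast between amplifiers and repeaters shows up in a small simulation. This is a toy model under stated assumptions, not a model of any real telephone system: signal levels are normalized to 0 and 1, each of 60 links adds Gaussian noise with an illustrative standard deviation of 0.05, and the receiver decides at a threshold of 0.5. The names `transmit` and `count_errors` are hypothetical.

```python
import random

THRESHOLD = 0.5   # decide "1" above this level, "0" below it

def transmit(bits, stages, sigma, regenerate, rng):
    """Send each bit through `stages` noisy links and return what arrives.

    Every link adds Gaussian noise (standard deviation `sigma`) to the
    normalized signal level. With regenerate=False each stage acts as an
    amplifier: it restores the level but passes the accumulated noise along.
    With regenerate=True each stage acts as a repeater: it decides 0 or 1
    and sends a fresh, clean pulse on to the next link.
    """
    received = []
    for bit in bits:
        level = float(bit)
        for _ in range(stages):
            level += rng.gauss(0.0, sigma)
            if regenerate:
                level = 1.0 if level > THRESHOLD else 0.0
        received.append(1 if level > THRESHOLD else 0)
    return received

def count_errors(sent, received):
    return sum(s != r for s, r in zip(sent, received))

rng = random.Random(1)                      # fixed seed so runs are repeatable
bits = [rng.randint(0, 1) for _ in range(1000)]
analog = count_errors(bits, transmit(bits, 60, 0.05, False, rng))
digital = count_errors(bits, transmit(bits, 60, 0.05, True, rng))
print("amplifier chain errors:", analog)
print("repeater chain errors:", digital)
```

With these settings the amplified chain ends with accumulated noise of standard deviation about 0.05 × √60 ≈ 0.39, so roughly one bit in ten arrives wrong, while the repeater chain, which strips the noise at every stage, should deliver all 1,000 bits intact.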

Digital computers can take advantage of these features and carry out very deep and accurate computations which are beyond the reach of analog computation. Analog computers have probably passed their peak of importance, but should not be dismissed lightly. They have some features which, so long as great accuracy or deep computations are not required, make them ideal in some situations.

- The invention and development of transistors and integrated circuits (ICs) has greatly helped the digital revolution. Before ICs the problem of soldered joints dominated the building of a large computer, and ICs did away with most of this problem, though soldered joints are still troublesome. Furthermore, the high density of components in an IC means lower cost and higher speeds of computing (the parts must be close to each other, since otherwise the time of transmission of signals will significantly slow down the speed of computation). The steady decrease of both the voltage and current levels has helped partially solve the problem of heat dissipation.

It was estimated in 1992 that interconnection costs were approximately as follows.

| Interconnection | Cost (dollars) | Cost (cents) |
|---|---|---|
| On the chip | $10^{-5}$ | 0.001 |
| Interchip | $10^{-2}$ | 1 |
| Interboard | $10^{-1}$ | 10 |
| Interframe | $10^{0}$ | 100 |

- Society is steadily moving from a material goods society to an information service society. At the time of the American Revolution, say 1780 or so, over 90% of the people were essentially farmers—now farmers are a very small percentage of workers. Similarly, before WWII most workers were in factories—now less than half are there. In 1993, there were more people in government (excluding the military) than there were in manufacturing! What will the situation be in 2020? As a guess I would say less than 25% of the people in the civilian workforce will be handling things; the rest will be handling information in some form or other. In making a movie or a TV program you are making not so much a thing, though of course it does have a material form, as you are organizing information. Information is, of course, stored in a material form, say a book (the essence of a book is information), but information is not a material good to be consumed like food, a house, clothes, an automobile, or an airplane ride for transportation.

The information revolution arises from the above three items plus their synergistic interaction, though the following items also contribute.

- The computers make it possible for robots to do many things, including much of the present manufacturing. Evidently computers will play a dominant role in robot operation, though one must be careful not to claim the standard von Neumann type of computer will be the sole control mechanism; rather, probably the current neural net computers, fuzzy set logic, and variations will do much of the control. Setting aside the child’s view of a robot as a machine resembling a human, and thinking of it rather as a device for handling and controlling things in the material world, robots used in manufacturing do the following:

  - Produce a better product under tighter control limits.

  - Usually produce a cheaper product.

  - Produce a different product.

This last point needs careful emphasis.

When we first passed from hand accounting to machine accounting we found it necessary, for economic reasons if no other, to somewhat alter the accounting system. Similarly, when we passed from strict hand fabrication to machine fabrication we passed from mainly screws and bolts to rivets and welding.

It has rarely proved practical to produce exactly the same product by machines as we produced by hand.

Indeed, one of the major items in the conversion from hand to machine production is the imaginative redesign of an equivalent product. Thus in thinking of mechanizing a large organization, it won’t work if you try to keep things in detail exactly the same; rather, there must be a larger give and take if there is to be a significant success. You must get the essentials of the job in mind and then design the mechanization to do that job rather than trying to mechanize the current version—if you want a significant success in the long run.

I need to stress this point: mechanization requires you produce an equivalent product, not identically the same one. Furthermore, in any design it is now essential to consider field maintenance since in the long run it often dominates all other costs. The more complex the designed system, the more field maintenance must be central to the final design. Only when field maintenance is part of the original design can it be safely controlled; it is not wise to try to graft it on later. This applies to both mechanical things and to human organizations.

- The effects of computers on science have been very large, and will probably continue as time goes on. My first experience in large-scale computing was in the design of the original atomic bomb at Los Alamos. There was no possibility of a small-scale experiment—either you have a critical mass or you do not—and hence computing seemed at that time to be the only practical approach. We simulated, on primitive IBM accounting machines, various proposed designs, and they gradually came down to a design to test in the desert at Alamogordo, New Mexico.

From that one experience, on thinking it over carefully and what it meant, I realized computers would allow the simulation of many different kinds of experiments. I put that vision into practice at Bell Telephone Laboratories for many years. Somewhere in the mid to late 1950s, in an address to the President and VPs of Bell Telephone Laboratories, I said, “At present we are doing one out of ten experiments on the computers and nine in the labs, but before I leave it will be nine out of ten on the machines.” They did not believe me then, as they were sure real observations were the key to experiments and I was just a wild theoretician from the mathematics department, but you all realize by now we do somewhere between 90% and 99% of our experiments on the machines and the rest in the labs. And this trend will go on! It is so much cheaper to do simulations than real experiments, so much more flexible in testing, and we can even do things which cannot be done in any lab, so it is inevitable the trend will continue for some time. Again, the product was changed!

But you were all taught about the evils of medieval scholasticism—people deciding what would happen by reading in the books of Aristotle (384–322 BC) rather than looking at nature. This was Galileo’s (1564–1642) great point, which started the modern scientific revolution—look at nature, not in books! But what was I saying above? We are now looking more and more in books and less and less at nature! There is clearly a risk we will go too far occasionally—and I expect this will happen frequently in the future. We must not forget, in all the enthusiasm for computer simulations, that occasionally we must look at nature as she is.

Computers have also greatly affected engineering. Not only can we design and build far more complex things than we could by hand, we can explore many more alternate designs. We also now use computers to control situations, such as on the modern high-speed airplane, where we build unstable designs and then use high-speed detection and computers to stabilize them, since the unaided pilot simply cannot fly them directly. Similarly, we can now do unstable experiments in the laboratories using a fast computer to control the instability. The result will be that the experiment will measure something very accurately right on the edge of stability.

As noted above, engineering is coming closer to science, and hence the role of simulation in unexplored situations is rapidly increasing in engineering as well as science. It is also true computers are now often an essential component of a good design.

In the past engineering has been dominated to a great extent by “what can we do,” but now “what do we want to do” looms greater since we now have the power to design almost anything we want. More than ever before, engineering is a matter of choice and balance rather than just doing what can be done. And more and more it is the human factors which will determine good design—a topic which needs your serious attention at all times.

- The effects on society are also large. The most obvious illustration is that computers have given top management the power to micromanage their organization, and top management has shown little or no ability to resist using this power. You can regularly read in the papers some big corporation is decentralizing, but when you follow it for several years you see they merely intended to do so, but did not.

Among the other evils of micromanagement is that lower management does not get the chance to make responsible decisions and learn from their mistakes; rather, because the older people finally retire, lower management eventually finds itself as top management—without having had many real experiences in management! Furthermore, central planning has been repeatedly shown to give poor results (consider the Russian experiment, for example, or our own bureaucracy). The persons on the spot usually have better knowledge than those at the top and hence can often (not always) make better decisions if things are not micromanaged. The people at the bottom do not have the larger, global view, but those at the top do not have the local view of all the details, many of which can often be very important, so either extreme gets poor results.

Next, an idea which arises in the field, based on the direct experience of the people doing the job, cannot get going in a centrally controlled system since the managers did not think of it themselves. The not-invented-here (NIH) syndrome is one of the major curses of our society, and computers, with their ability to encourage micromanagement, are a significant factor.

There is slowly, but apparently definitely, coming a counter trend to micromanagement. Loose connections between small, somewhat independent organizations are gradually arising. Thus in the brokerage business one company has set itself up to sell its services to other small subscribers, for example computer and legal services. This leaves the brokerage decisions of their customers to the local management people who are close to the front line of activity. Similarly, in the pharmaceutical area, some loosely related companies carry out their work and trade among themselves as they see fit. I believe you can expect to see much more of this loose association between small organizations as a defense against micromanagement from the top, which occurs so often in big organizations. There has always been some independence of subdivisions in organizations, but the power to micromanage from the top has apparently destroyed the conventional lines and autonomy of decision making—and I doubt the ability of most top managements to resist for long the power to micromanage. I also doubt many large companies will be able to give up micromanagement; most will probably be replaced in the long run by smaller organizations without the cost (overhead) and errors of top management. Thus computers are affecting the very structure of how society does its business, and for the moment apparently for the worse in this area.

- Computers have already invaded the entertainment field. An informal survey indicates the average American spends far more time watching TV than eating—again an information field is taking precedence over the vital material field of eating! Many commercials and some programs are now either partially or completely computer produced.

How far machines will go in changing society is a matter of speculation—which opens doors to topics that would cause trouble if discussed openly! Hence I must leave it to your imaginations as to what, using computers on chips, can be done in such areas as sex, marriage, sports, games, “travel in the comforts of home via virtual realities,” and other human activities.

Computers began mainly in the field of number crunching but passed rapidly on to information retrieval (say airline reservation systems); word processing, which is spreading everywhere; symbol manipulation, as is done by many programs, such as those which can do analytic integration in the calculus far better and cheaper than can the students; and in logical and decision areas, where many companies use such programs to control their operations from moment to moment. The future computer invasion of traditional fields remains to be seen and will be discussed later under the heading of Artificial Intelligence (AI), in Chapters 6–8.

- In the military it is easy to observe (in the Gulf War, for example) the central role of information, and the failure to use the information about one’s own situation killed many of our own people! Clearly that war was one of information above all else, and it is probably one indicator of the future. I need not tell you such things since you are all aware, or should be, of this trend. It is up to you to try to foresee the situation in the year 2020 when you are at the peak of your careers. I believe computers will be almost everywhere, since I once saw a sign which read, “The battlefield is no place for the human being.” Similarly for situations requiring constant decision making. The many advantages of machines over humans were listed near the end of the last chapter, and it is hard to get around these advantages, though they are certainly not everything. Clearly the role of humans will be quite different from what it has traditionally been, but many of you will insist on old theories you were taught long ago as if they would be automatically true in the long future. It will be the same in business: much of what is now taught is based on the past, and has ignored the computer revolution and our responses to some of the evils the revolution has brought; the gains are generally clear to management, the evils are less so. How widely the trends toward and away from micromanagement, predicted above in discussing the effects on society, will apply is again a topic best left to you—but you will be a fool if you do not give it your deep and constant attention. I suggest you must rethink everything you ever learned on the subject, question every successful doctrine from the past, and finally decide for yourself its future applicability.
The Buddha told his disciples, “Believe nothing, no matter where you read it, or who said it, no matter if I have said it, unless it agrees with your own reason and your own common sense.” I say the same to you—you must assume the responsibility for what you believe.

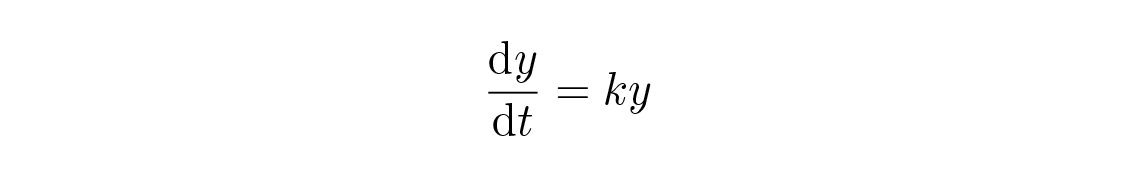

The simplest model of growth assumes the rate of growth is proportional to the current size—something like compound interest, unrestrained bacterial and human population growth, as well as many other examples. The corresponding differential equation is

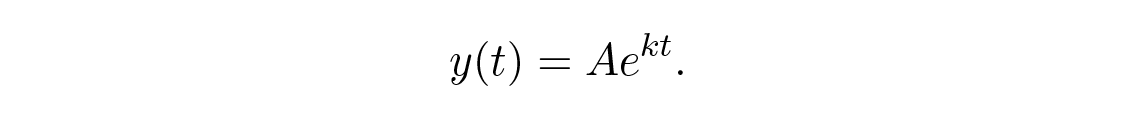

whose solution is, of course,

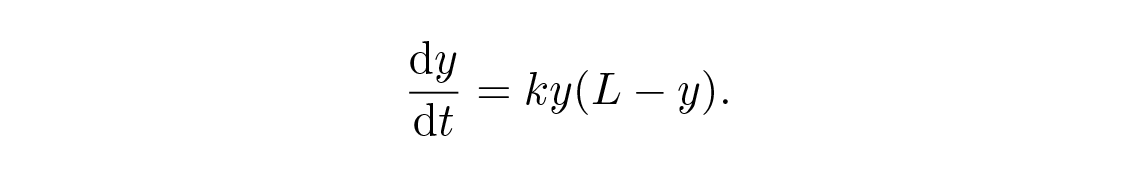

But this growth is unlimited and all things must have limits, even knowledge itself, since it must be recorded in some form and we are (currently) told the universe is finite! Hence we must include a limiting factor in the differential equation. Let L be the upper limit. Then the next simplest growth equation seems to be

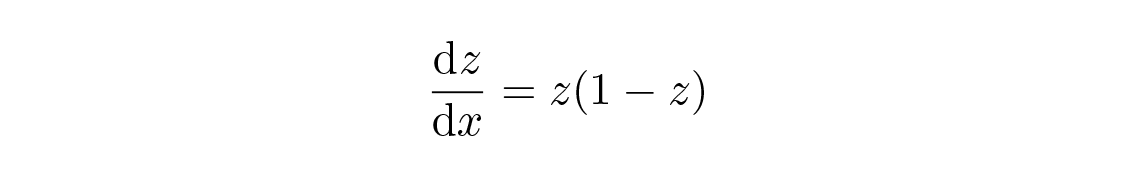

At this point we of course reduce it to a standard form that eliminates the constants. Set y = Lz and x = kLt, then we have

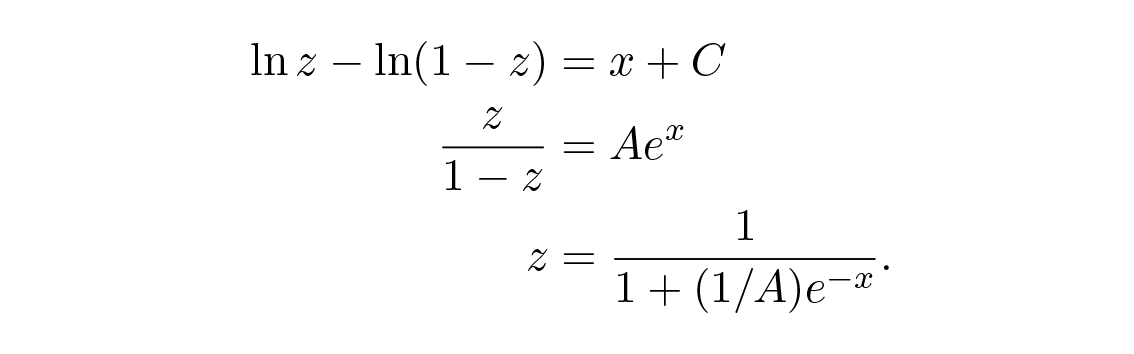

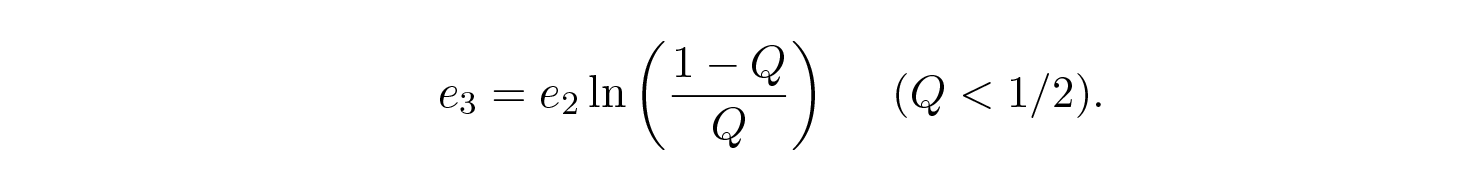

as the reduced form for the growth problem, where the saturation level is now 1. Separation of variables plus partial fractions yields

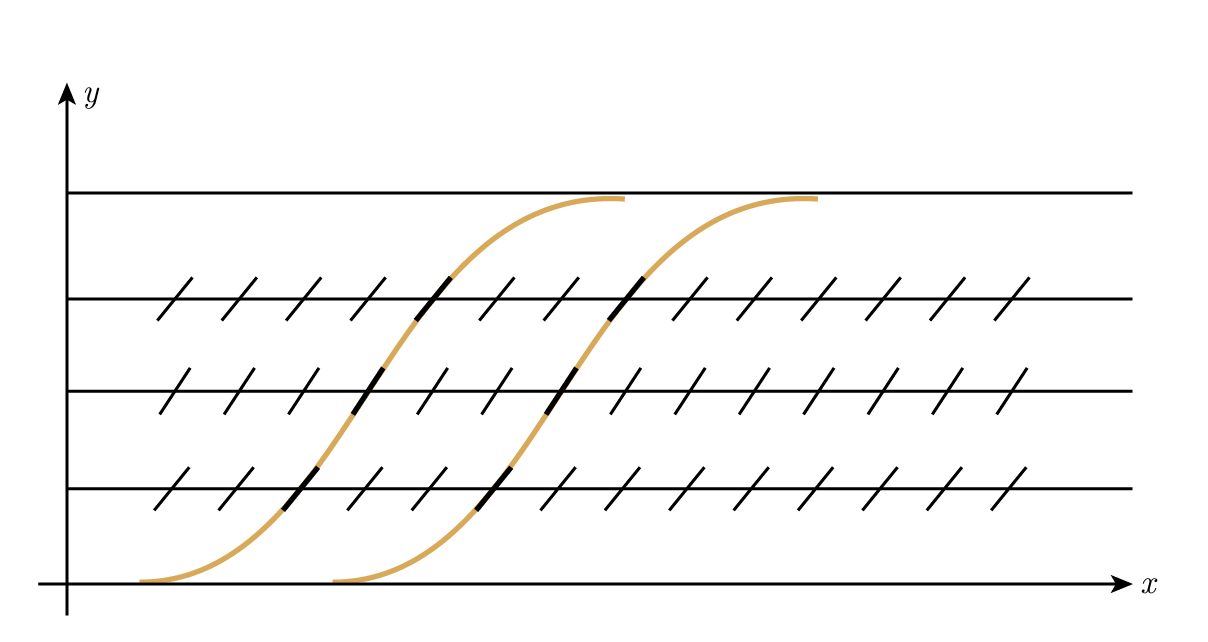

A is, of course, determined by the initial conditions, where you put t (or x) = 0. You see immediately the S shape of the curve: at t = −∞, z = 0; at t = 0, z = A/(A + 1); and at t = + ∞, z = 1.
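A quick numerical check of the reduced equation is easy to write. This sketch assumes the limited-growth equation is $dy/dt = ky(L - y)$, which the substitution $y = Lz$, $x = kLt$ turns into $dz/dx = z(1 - z)$; the closed form $z = Ae^x/(1 + Ae^x)$ used for comparison is the one consistent with the values just listed ($z = A/(A + 1)$ at the origin, limits 0 and 1). The value $A = 0.01$ and the step count are illustrative choices.

```python
import math

def f(z):
    # Reduced logistic equation dz/dx = z(1 - z); the saturation level is 1.
    return z * (1.0 - z)

def rk4(z0, x_end, steps):
    """Integrate dz/dx = f(z) from x = 0 to x_end with classical Runge-Kutta."""
    h = x_end / steps
    z = z0
    for _ in range(steps):
        k1 = f(z)
        k2 = f(z + 0.5 * h * k1)
        k3 = f(z + 0.5 * h * k2)
        k4 = f(z + h * k3)
        z += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6.0
    return z

def closed_form(x, A):
    # S-shaped solution: 0 as x -> -inf, A/(A + 1) at x = 0, 1 as x -> +inf
    return A * math.exp(x) / (1.0 + A * math.exp(x))

A = 0.01                  # illustrative: starts near the bottom of the S
z0 = A / (A + 1.0)
for x in (-3.0, 2.0, 5.0, 10.0):
    print(x, rk4(z0, x, 1000), closed_form(x, A))
```

The numerical and analytic columns should agree to many decimal places, and the printed values trace the slow start, the steep rise, and the saturation near 1.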

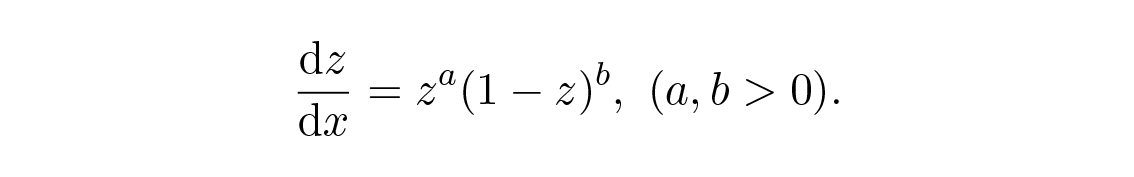

A more flexible model for the growth is (in the reduced variables)

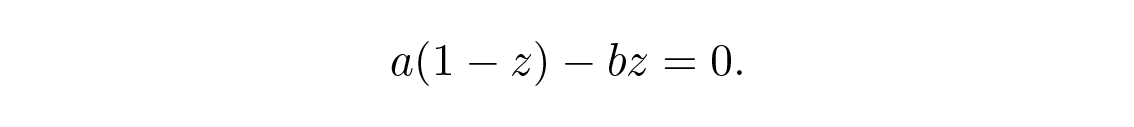

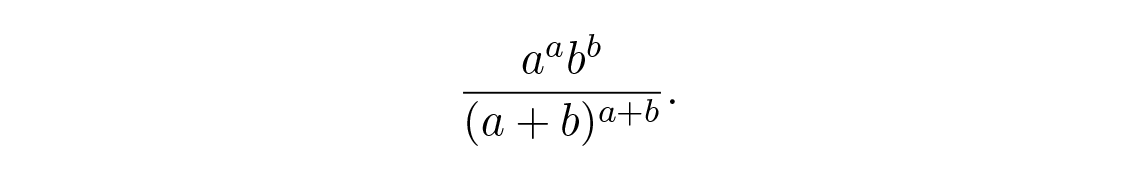

This is again a separable equation, and also yields to numerical integration if you wish. We can analytically find the steepest slope by differentiating the right-hand side and equating to 0, getting

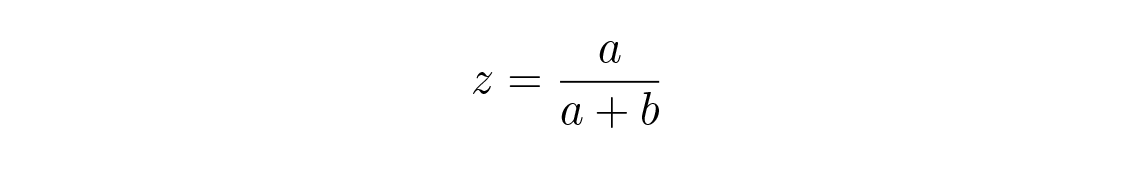

Hence at the place $z = a/(a + b)$ we have the maximum slope

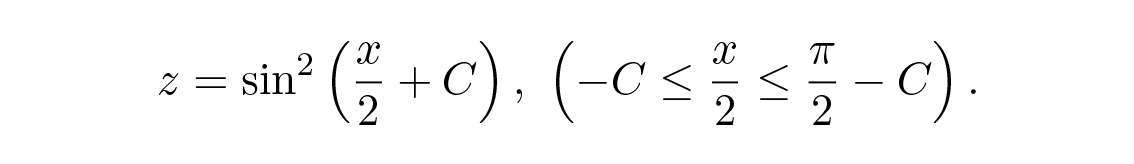

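In the special case of a = b we have maximum slope = $2^{-2a}$. The curve will in this case be odd symmetric about the point where z = 1/2. In the further special case of a = b = 1/2 we get the solution $z = [1 + \sin(x - x_0)]/2$ for $|x - x_0| \le \pi/2$, where $x_0$ is fixed by the initial condition.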

Here we see the solution curve has a finite range. For larger exponents a and b we have clearly an infinite range.
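The location and size of the steepest slope can be checked by brute force. The sketch assumes the flexible model is $dz/dx = z^a(1 - z)^b$, which is consistent with the special case $a = b$ giving a maximum slope of $2^{-2a}$. Setting the derivative $z^{a-1}(1 - z)^{b-1}[a(1 - z) - bz]$ to zero gives $z = a/(a + b)$ and a maximum slope of $a^a b^b/(a + b)^{a+b}$. The last lines check that $z = (1 + \sin x)/2$ satisfies the $a = b = 1/2$ case for $|x| \le \pi/2$. Function names are hypothetical.

```python
import math

def slope(z, a, b):
    # Assumed flexible growth model in reduced variables: dz/dx = z**a * (1 - z)**b
    return z ** a * (1.0 - z) ** b

def max_slope_numeric(a, b, n=100_000):
    """Brute-force search for the steepest slope on a fine grid of z in (0, 1)."""
    best_z = max((i / n for i in range(1, n)), key=lambda z: slope(z, a, b))
    return best_z, slope(best_z, a, b)

def max_slope_formula(a, b):
    z_star = a / (a + b)            # where the derivative of the slope vanishes
    return z_star, slope(z_star, a, b)

cases = [(1, 1), (2, 1), (0.5, 0.5), (3, 2)]
for a, b in cases:
    print((a, b), max_slope_numeric(a, b), max_slope_formula(a, b))

# Special case a = b = 3/2: the maximum slope should be 2**(-3) at z = 1/2.
print(slope(0.5, 1.5, 1.5), 2 ** -3)

# Special case a = b = 1/2: z = (1 + sin x)/2 gives dz/dx = cos(x)/2,
# which equals sqrt(z(1 - z)) while cos(x) >= 0, i.e. for |x| <= pi/2.
x = 0.3
z = (1 + math.sin(x)) / 2
print(math.cos(x) / 2, slope(z, 0.5, 0.5))
```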

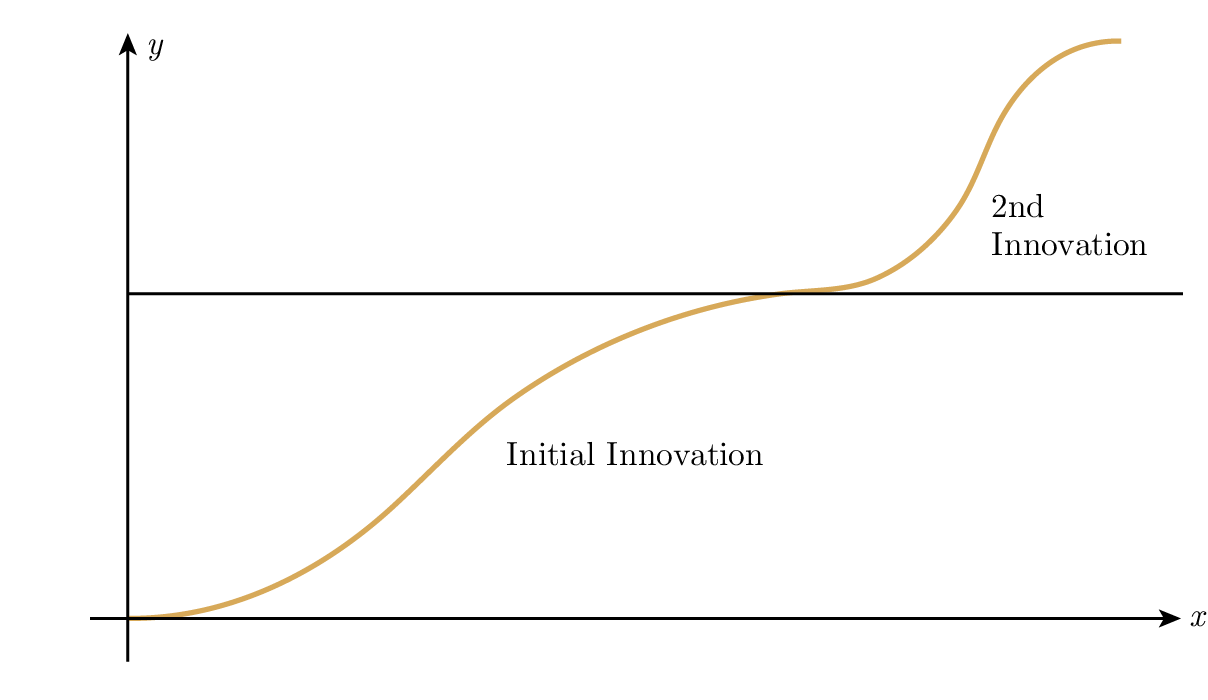

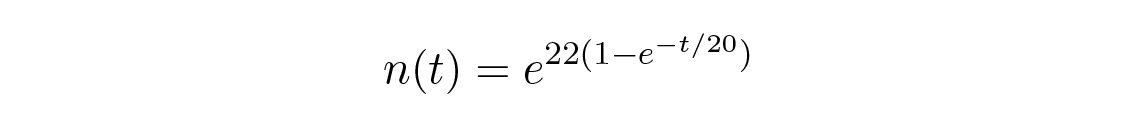

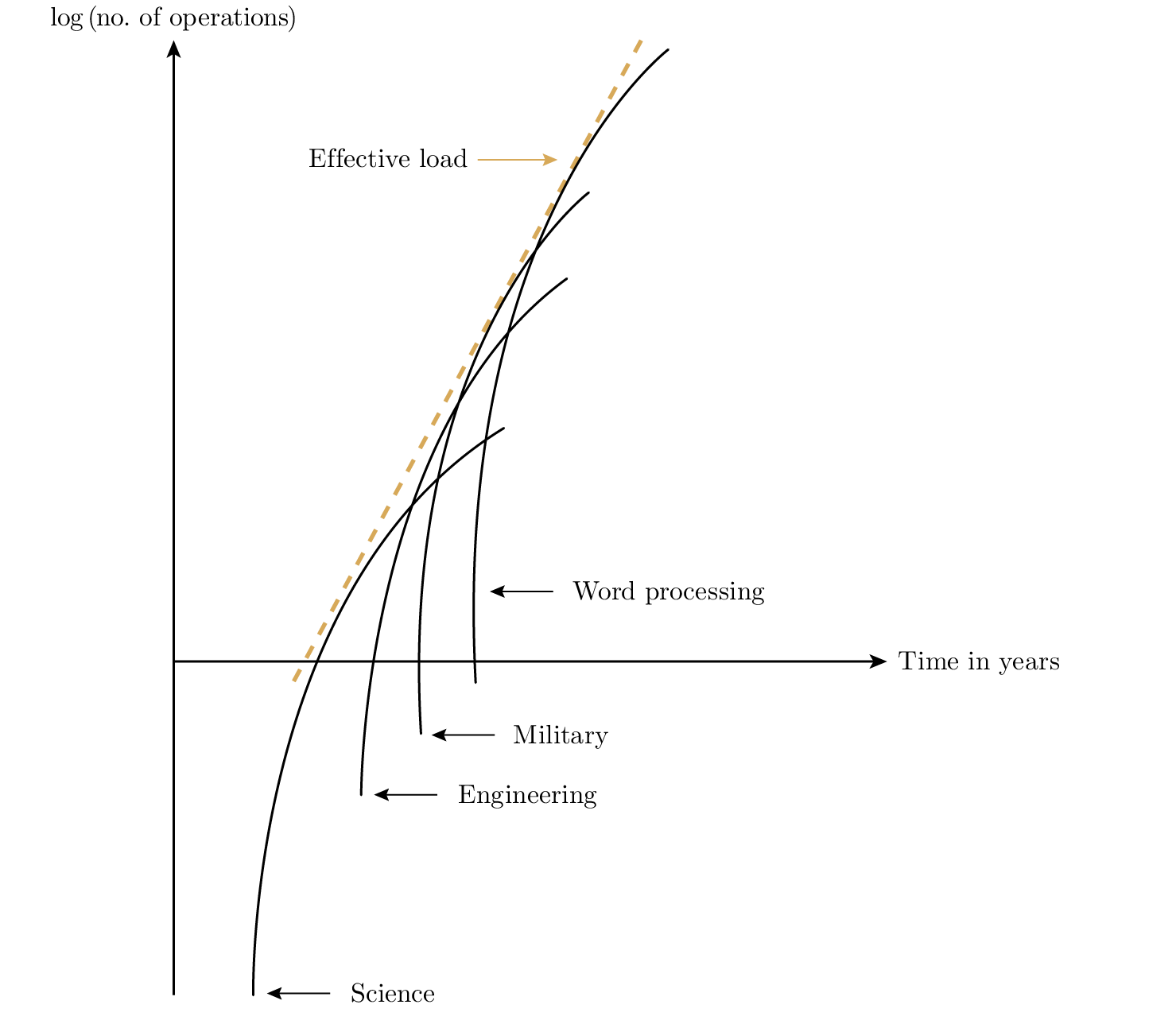

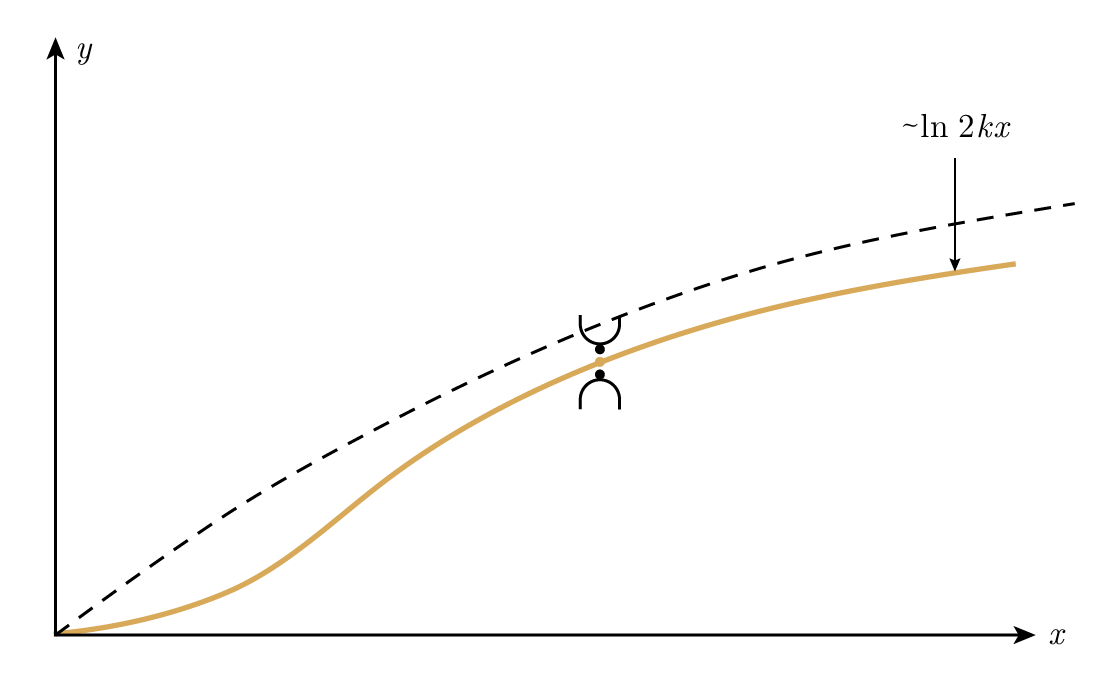

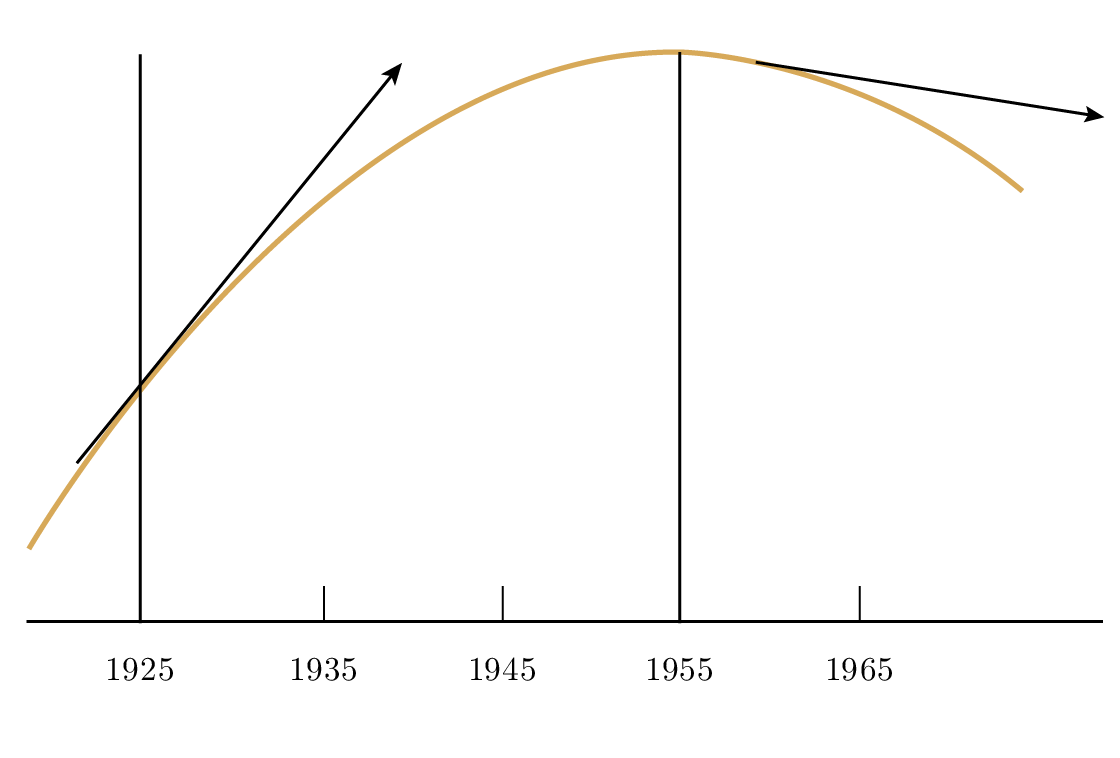

Again we see how a simple model, while not very exact in detail, suggests the nature of the situation. Whether parallel processing fits into this picture or is an independent curve is not clear at this moment. Often a new innovation will set the growth of a field onto a new S curve which takes off from around the saturation level of the old one, Figure 2.2. You may want to explore models which do not have a hard upper saturation limit but rather finally grow logarithmically; they are sometimes more appropriate.

It is evident electrical engineering in the future is going to be, to a large extent, a matter of (1) selecting chips off the shelf or from a catalog, (2) putting the chips together in a suitable manner to get what you want, and (3) writing the corresponding programs. Awareness of the chips and circuit boards which are currently available will be an essential part of engineering, much as the Vacuum Tube Catalog was in the old days. Using standard, general-purpose chips rather than designing your own special-purpose ones has several advantages:

- Other users of the chip will help find the errors, or other weaknesses, if there are any.

- Other users will help write the manuals needed to use it.

- Other users, including the manufacturer, will suggest upgrades of the chip, hence you can expect a steady stream of improved chips with little or no effort on your part.

- Inventory will not be a serious problem.

- Since, as I have repeatedly said, technical progress is going on at an increasing rate, it follows technological obsolescence will be much more rapid in the future than it is now. You will hardly get a system installed and working before there are significant improvements which you can adapt by mere program changes if you have used general-purpose chips and good programming methods rather than your special-purpose chip, which will almost certainly tie you down to your first design.

Hence beware of special-purpose chips! Though many times they are essential.

3 History of computers—hardware

The history of computing probably began with primitive man using pebbles to compute the sum of two amounts. Marshack (of Harvard) found that what had been believed to be mere scratches on old bones from caveman days were in fact carefully scribed lines apparently connected with the moon’s phases. The famous

The sand pan and the abacus are instruments more closely connected with computing, and the arrival of the Arabic numerals from India meant a great step forward in the area of pure computing. Great resistance to the adoption of the Arabic numerals (not in their original Arabic form) was encountered from officialdom, even to the extent of making them illegal, but in time (the 1400s) the practicalities and economic advantages triumphed over the more clumsy Roman (and earlier Greek) use of letters of the alphabet as symbols for the numbers.

The invention of logarithms by Napier (1550–1617) was the next great step. From it came the slide rule, which has the numbers on the parts as lengths proportional to the logs of the numbers, hence adding two lengths means multiplying the two numbers. This analog device, the slide rule, was another significant step forward, but in the area of analog, not digital, computers. I once used a very elaborate slide rule in the form of a 6–8-inch diameter cylinder about two feet long, with many, many suitable scales on both the outer and inner cylinders, and equipped with a magnifying glass to make the reading of the scales more accurate.
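The principle, that adding lengths proportional to logarithms multiplies the numbers, fits in a few lines. This is an idealized model under assumptions: a single ten-inch scale running from 1 to 10, a hypothetical reading resolution of 0.01 inch, and the user keeping track of the decimal point separately, as slide-rule users had to. The rounding step stands in for the limited accuracy of reading an analog scale.

```python
import math

SCALE_INCHES = 10.0       # a "ten-inch" rule
READ_RESOLUTION = 0.01    # hypothetical: how finely a position can be read, in inches

def position(x):
    """Distance in inches of the mark for x (1 <= x < 10) from the left index."""
    if not 1.0 <= x < 10.0:
        raise ValueError("this sketch only handles numbers in [1, 10)")
    return SCALE_INCHES * math.log10(x)

def read(pos):
    """Read a number back from a position, limited by the reading resolution."""
    pos = round(pos / READ_RESOLUTION) * READ_RESOLUTION
    return 10 ** (pos / SCALE_INCHES)

def slide_rule_multiply(x, y):
    # Adding the two lengths multiplies the numbers. If the sum runs past the
    # end of the scale, wrap around and remember one extra power of ten.
    total = position(x) + position(y)
    power = 0
    if total >= SCALE_INCHES:
        total -= SCALE_INCHES
        power = 1
    return read(total) * 10 ** power

print(slide_rule_multiply(2.0, 3.0))   # close to 6, off in the third or fourth digit
print(slide_rule_multiply(3.7, 4.2))   # close to 15.54
```

The answers are good to about three significant figures, which matches the analog character of the instrument: accuracy is set by how finely lengths can be read, not by any digit-by-digit rule.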

Slide rules in the 1930s and 1940s were standard equipment of the engineer, usually carried in a leather case fastened to the belt as a badge of one’s group on the campus. The standard engineer’s slide rule was a “ten-inch log log decitrig slide rule,” meaning the scales were ten inches long, included log log scales, square and cubing scales, as well as numerous trigonometric scales in decimal parts of the degree. They are no longer manufactured!

During WWII electronic analog computers came into military field use. They used condensers as integrators in place of the earlier mechanical wheels and balls (hence they could only integrate with respect to time). They meant a large, practical step forward, and I used one such machine at Bell Telephone Laboratories for many years. It was constructed from parts of some old M9 gun directors. Indeed, we used parts of some later condemned M9s to build a second computer to be used either independently or with the first one to expand its capacity to do larger problems.

Returning to digital computing, Napier also designed “Napier’s bones,” which were typically ivory rods with numbers which enabled one to multiply numbers easily; these are digital and not to be confused with the analog slide rule.

The next major practical stage was the comptometer, which was merely an adding device; but repeated additions, along with shifting, are equivalent to multiplication, and the comptometer was very widely used for many, many years.
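Multiplication by repeated addition and shifting can be sketched as follows. This is an illustration of the arithmetic idea, not a model of any particular machine's mechanism; the function name is hypothetical and it handles only non-negative integers.

```python
def multiply_by_repeated_addition(multiplicand, multiplier):
    """Multiply non-negative integers using only addition and column shifts.

    For each decimal digit of the multiplier (units digit first), add the
    multiplicand into the running total that many times, then shift the
    multiplicand one column to the left before handling the next digit.
    Returns the product and the number of additions performed.
    """
    product = 0
    shifted = multiplicand          # aligned with the units column
    additions = 0
    while multiplier > 0:
        digit = multiplier % 10
        for _ in range(digit):      # repeated addition for this column
            product += shifted
            additions += 1
        shifted *= 10               # shift one decimal column left
        multiplier //= 10
    return product, additions

print(multiply_by_repeated_addition(347, 26))   # (9022, 8)
```

Shifting is what makes this practical: 347 × 26 takes 6 + 2 = 8 additions instead of 26.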

From this came a sequence of more modern desk calculators, the Millionaire, then the Marchant, the Friden, and the Monroe. At first they were hand controlled and hand powered, but gradually some of the control was built in, mainly by mechanical levers. Beginning around 1937 they gradually acquired electric motors to do much of the power part of the computing. Before 1944 at least one had the operation of square root incorporated into the machine (still mechanical levers intricately organized). Such hand machines were the basis of computing groups of people running them to provide computing power. For example, when I came to the Bell Telephone Laboratories in 1946 there were four such groups in the Labs, typically about six to ten girls in a group: a small group in the mathematics department, a larger one in the network department, one in switching, and one in quality control.

Let me turn to some comparisons:

| Machine | Speed (operations per second) |
|---|---|
| Hand calculators | 1/20 |
| Relay machines | 1, typically |
| Magnetic drum machines | 15–1,000, depending somewhat on fixed or floating point |
| 701 type | 1,000 |
| Current (1990) | $10^{9}$ (around the fastest of the von Neumann type) |

The changes in speed, and corresponding storage capacities, that I have had to live through should give you some idea as to what you will have to endure in your careers. Even for von Neumann-type machines there is probably another factor of speed of around 100 before reaching the saturation speed.

Since such numbers are actually beyond most human experience, I need to introduce a human dimension to the speeds you will hear about. First, notation (the parentheses contain the standard symbol):

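| Prefix (symbol) | Factor | Prefix (symbol) | Factor |
|---|---|---|---|
| milli (m) | $10^{-3}$ | kilo (k) | $10^{3}$ |
| micro (µ) | $10^{-6}$ | mega (M) | $10^{6}$ |
| nano (n) | $10^{-9}$ | giga (G) | $10^{9}$ |
| pico (p) | $10^{-12}$ | tera (T) | $10^{12}$ |
| femto (f) | $10^{-15}$ | | |
| atto (a) | $10^{-18}$ | | |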

Now to the human dimensions. In one day there are 60 × 60 × 24 = 86,400 seconds. In one year there are close to $3.15 \times 10^{7}$ seconds, and in 100 years, probably greater than your lifetime, there are about $3.15 \times 10^{9}$ seconds. Thus in three seconds a machine doing $10^{9}$ floating point operations per second (FLOPS) will do more operations than there are seconds in your whole lifetime, and almost certainly get them all correct!
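The arithmetic behind these human-scale comparisons, written out. The only assumption beyond the text is a 365-day year with leap days ignored.

```python
SECONDS_PER_DAY = 60 * 60 * 24
SECONDS_PER_YEAR = 365 * SECONDS_PER_DAY      # leap days ignored
SECONDS_PER_CENTURY = 100 * SECONDS_PER_YEAR
FLOPS = 10 ** 9                               # the 1990-era machine in the text

print(SECONDS_PER_DAY)                        # 86400
print(f"{SECONDS_PER_YEAR:.3e}")              # 3.154e+07
print(f"{SECONDS_PER_CENTURY:.3e}")           # 3.154e+09
# Machine time needed to do one operation per second of a century:
print(SECONDS_PER_CENTURY / FLOPS)            # 3.1536
```

So a little over three seconds of machine time matches every second of a full century, and a lifetime shorter than that century is covered in three seconds or less.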

For another approach to human dimensions, the velocity of light in a vacuum is about $3 \times 10^{10}$ cm/sec (along a wire it is about 7/10 as fast). Thus in a nanosecond light goes 30 cm, about one foot. In a picosecond the distance is, of course, about 1/100 of an inch. These represent the distances a signal can go (at best) in an IC. Thus at some of the pulse rates we now use the parts must be very close to each other—close in human dimensions—or else much of the potential speed will be lost in going between parts. Also, since the travel time of signals between parts is no longer negligible, we can no longer use lumped circuit analysis.

How about natural dimensions of length instead of human dimensions? Well, atoms come in various sizes, running generally around 1 to 3 angstroms (an angstrom is $10^{-8}$ cm) and in a crystal are spaced around 10 angstroms apart, typically, though there are exceptions. In 1 femtosecond light can go across about 300 atoms. Therefore the parts in a very fast computer must be small and very close together!
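These distances follow from one multiplication each. The sketch below uses only the text's figures: light at $3 \times 10^{10}$ cm/sec, 7/10 of that along a wire, and a typical crystal spacing of 10 angstroms. The function name `distance_cm` is hypothetical.

```python
C_CM_PER_S = 3e10        # speed of light in vacuum, as given in the text
WIRE_FRACTION = 0.7      # "along a wire it is about 7/10 as fast"
ATOM_SPACING_CM = 1e-7   # the text's typical crystal spacing: 10 angstroms
CM_PER_INCH = 2.54

def distance_cm(seconds, in_wire=False):
    """Best-case distance in cm a signal can travel in the given time."""
    speed = C_CM_PER_S * (WIRE_FRACTION if in_wire else 1.0)
    return speed * seconds

for label, t in [("1 ns", 1e-9), ("1 ps", 1e-12), ("1 fs", 1e-15)]:
    d = distance_cm(t)
    print(f"{label}: {d:.3g} cm ({d / CM_PER_INCH:.3g} in) in vacuum, "
          f"{distance_cm(t, in_wire=True):.3g} cm along a wire, "
          f"about {d / ATOM_SPACING_CM:.0f} atom spacings")
```

A nanosecond gives 30 cm, a picosecond about 0.012 inch (roughly the 1/100 inch in the text), and a femtosecond about 300 atom spacings.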

If you think of a transistor using impurities, and the impurities run around one in a million typically, then you would probably not believe a transistor with one impure atom, but maybe, if you lower the temperature to reduce background noise, 1,000 impurities is within your imagination—thus making the solid-state device at least around 1,000 atoms on a side. With interconnections at times running at least ten device distances, you see why getting below 100,000 atoms’ distance between some interconnected devices is really pushing things (3 picoseconds).

Then there is heat dissipation. While there has been talk of thermodynamically reversible computers, so far it has only been talk and published papers, and heat still matters. The more parts per unit area, and the faster the rate of state change, the more heat is generated in a small area, and it must be gotten rid of before things melt. To partially compensate we have been going to lower and lower voltages, and are now going to 2½ or 3 volts to operate the IC. The possibility that the base of the chip have a diamond layer is currently being examined, since diamond is a very good heat conductor, much better than copper. There is now a reasonable possibility of a similar crystal structure, possibly less expensive than diamond, with very good heat-conduction properties.

To speed up computers we have gone to two, four, and even more arithmetic units in the same computer, and have also devised pipelines and cache memories. These are all small steps towards highly parallel computers.

Thus you see the handwriting on the wall for the single-processor machine—we are approaching saturation. Hence the fascination with highly parallel machines. Unfortunately there is as yet no single general structure for them, but rather many, many competing designs, all generally requiring different strategies to exploit their potential speeds and having different advantages and disadvantages. It is not likely a single design will emerge for a standard parallel computer architecture, hence there will be trouble and dissipation in efforts to pursue the various promising directions.

Here in the history of the growth of computers you see a realization of the S-type growth curve: the very slow start, the rapid rise, the long stretch of almost linear growth in the rate, and then the facing of the inevitable saturation.

Again, to reduce things to human size. When I first got digital computing really going inside Bell Telephone Laboratories I began by renting computers outside for so many hours the head of the mathematics department figured out for himself it would be cheaper to get me one inside—a deliberate plot on my part to avoid arguing with him, as I thought it useless and would only produce more resistance on his part to digital computers. Once a boss says “no!” it is very hard to get a different decision, so don’t let them say “no!” to a proposal. I found in my early years I was doubling the number of computations per year about every 15 months. Some years later I was reduced to doubling the amount about every 18 months. The department head kept telling me I could not go on at that rate forever, and my polite reply was always, “You are right, of course, but you just watch me double the amount of computing every 18–20 months!” Because the machines available kept up the corresponding rate, this enabled me, and my successors, for many years to double the amount of computing done. We lived on the almost straight line part of the S curve all those years.
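The gap between doubling every 15 months and every 18 or 20 months compounds quickly. A small sketch over a ten-year horizon (the horizon is an arbitrary illustrative choice; the doubling periods are the text's):

```python
def growth_factor(years, doubling_months):
    """Total growth over `years` when the amount doubles every `doubling_months` months."""
    return 2 ** (12 * years / doubling_months)

for months in (15, 18, 20):
    print(f"doubling every {months} months: about x{growth_factor(10, months):.0f} per decade")
```

Over a decade, doubling every 15 months gives a factor of 256, every 18 months about 100, and every 20 months 64, which is why even the "reduced" rate still meant enormous growth in the amount of computing.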

However, let me observe in all honesty to the department head, it was remarks by him which made me realize it was not the number of operations done that mattered, it was, as it were, the number of micro-Nobel prizes I computed that mattered. Thus the motto of a book I published in 1961:

The purpose of computing is insight, not numbers.

A good friend of mine revised it to:

The purpose of computing numbers is not yet in sight.

It is necessary now to turn to some of the details of how for many years computers were constructed. The smallest parts we will examine are two-state devices for storing bits of information, and gates which either let a signal go through or block it. Both are binary devices, and in the current state of knowledge they provide the easiest, fastest methods of computing we know.

From such parts we construct combinations which enable us to store longer arrays of bits; these arrays are often called number registers. The logical control is just a combination of storage units including gates. We build an adder out of such devices, as well as every larger unit of a computer.
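As an illustration of building an adder from gates, here is a ripple-carry adder made only of two-input AND, OR, and XOR gates. Ripple-carry is one standard textbook construction, assumed here for simplicity; it is not a claim about how any particular machine's adder was built. All function names are hypothetical.

```python
def and_gate(a, b): return a & b
def or_gate(a, b):  return a | b
def xor_gate(a, b): return a ^ b

def full_adder(a, b, carry_in):
    """Add three bits using only two-input gates; return (sum bit, carry out)."""
    partial = xor_gate(a, b)
    total = xor_gate(partial, carry_in)
    carry_out = or_gate(and_gate(a, b), and_gate(partial, carry_in))
    return total, carry_out

def ripple_add(x_bits, y_bits):
    """Add two equal-length bit lists (least significant bit first) by chaining
    full adders; the carry ripples from each stage into the next."""
    carry = 0
    out = []
    for a, b in zip(x_bits, y_bits):
        s, carry = full_adder(a, b, carry)
        out.append(s)
    out.append(carry)          # final carry becomes the extra top bit
    return out

def to_bits(n, width):
    return [(n >> i) & 1 for i in range(width)]

def from_bits(bits):
    return sum(bit << i for i, bit in enumerate(bits))

print(from_bits(ripple_add(to_bits(11, 4), to_bits(6, 4))))   # 17
```

Note that no gate "knows" it is doing arithmetic; each simply combines the bits presented to it, and the meaning comes from how we wired them and how we read the outputs.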

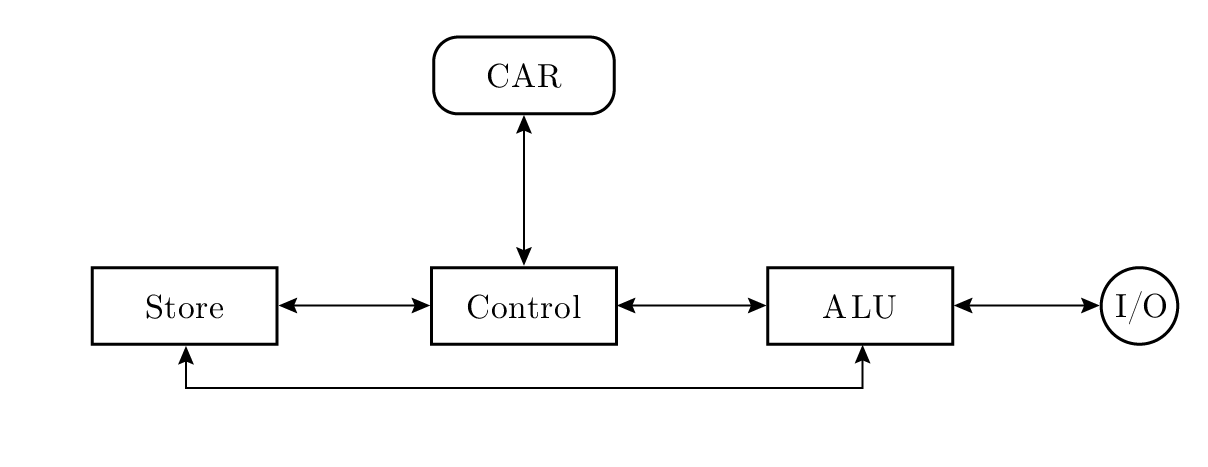

Going to the still larger units we have the machine consisting of: (1) a storage device, (2) a central control, (3) an ALU, meaning arithmetic and logic unit. There is in the central control a single register, which we will call the Current Address Register (CAR). It holds the address of where the next instruction is to be found (Figure 3.1).

The cycle of the computer is:

- Get the address of the next instruction from the CAR.

- Go to that address in storage and get that instruction.

- Decode and obey that instruction.

- Add 1 to the CAR address, and start in again.

We see the machine does not know where it has been, nor where it is going to go; it has at best only a myopic view of simply repeating the same cycle endlessly. Below this level the individual gates and two-way storage devices do not know any meaning—they simply react to what they are supposed to do. They too have no global knowledge of what is going on, nor any meaning to attach to any bit, whether storage or gating.

There are some instructions which, depending on some state of the machine, put an address specified in the instruction into the CAR (and 1 is not added in such cases); then the machine, in starting its cycle, simply finds an address which is not the immediate successor in storage of the previous instruction, but the location inserted into the CAR.
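The cycle above, including the conditional transfer of control, can be sketched as a tiny interpreter. The accumulator design, the opcode names (`LOAD`, `ADD`, `SUB`, `STORE`, `JUMP_IF_POS`, `HALT`), and the sample program are all hypothetical; only the fetch, decode and obey, add-1-to-the-CAR cycle comes from the text. Instructions and data share one storage, and the loop never interprets anything: it just follows addresses.

```python
def run(memory, max_steps=10_000):
    """Fetch-decode-execute loop for a hypothetical accumulator machine.

    `memory` holds both instructions, as (opcode, address) pairs, and data.
    """
    car = 0   # Current Address Register: address of the next instruction
    acc = 0   # accumulator in the ALU
    for _ in range(max_steps):
        op, addr = memory[car]            # fetch the instruction the CAR points to
        jumped = False
        if op == "LOAD":                  # decode and obey it
            acc = memory[addr]
        elif op == "ADD":
            acc += memory[addr]
        elif op == "SUB":
            acc -= memory[addr]
        elif op == "STORE":
            memory[addr] = acc
        elif op == "JUMP_IF_POS":         # conditional transfer of control
            if acc > 0:
                car = addr                # put the new address into the CAR...
                jumped = True             # ...and do not add 1 this time
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {op!r} at address {car}")
        if not jumped:
            car += 1                      # add 1 to the CAR and start again
    raise RuntimeError("step limit reached without HALT")

# Sum n + (n-1) + ... + 1 for n = 5, leaving the result in cell 11.
program = [
    ("LOAD", 11), ("ADD", 10), ("STORE", 11),   # 0-2: sum = sum + n
    ("LOAD", 10), ("SUB", 12), ("STORE", 10),   # 3-5: n = n - 1
    ("JUMP_IF_POS", 0),                         # 6:   repeat while n > 0
    ("HALT", None),                             # 7
    None, None,                                 # 8-9: unused cells
    5, 0, 1,                                    # 10: n, 11: sum, 12: constant 1
]
print(run(list(program))[11])   # 15
```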

I am reviewing this so you will be clear the machine processes bits of information according to other bits, and as far as the machine is concerned there is no meaning to anything which happens—it is we who attach meaning to the bits. The machine is a “machine” in the classical sense; it does what it does and nothing else (unless it malfunctions). There are, of course, real-time interrupts, and other ways new bits get into the machine, but to the machine they are only bits.

How different are we in practice from the machines? We would all like to think we are different from machines, but are we essentially? It is a touchy point for most people, and the emotional and religious aspects tend to dominate most arguments. We will return to this point in Chapters 6–8 on ai when we have more background to discuss it reasonably.

4 History of computers—software

As I indicated in the last chapter, in the early days of computing the control part was all done by hand. The slow desk computers were at first controlled by hand; for example, multiplication was done by repeated additions, with column shifting after each digit of the multiplier. Division was similarly done by repeated subtractions. In time electric motors were applied, both for power and later for more automatic control over multiplication and division. The punch card machines were controlled by plug board wiring to tell the machine where to find the information, what to do with it, and where to put the answers on the cards (or on the printed sheet of a tabulator), but some of the control might also come from the cards themselves, typically X and Y punches (other digits could, at times, control what happened). A plug board was specially wired for each job to be done, and in an accounting office the wired boards were usually saved and used again each week, or month, as they were needed in the cycle of accounting.

When we came to the relay machines, after

An interesting story about soap is a copy of the program, call it program A, was both loaded into the machine as a program and processed as data. The output of this was program B. Then B was loaded into the 650 and A was run as data to produce a new B program. The difference between the two running times to produce program B indicated how much the optimization of the soap program (by soap itself) produced. An early example of self-compiling, as it were.

In the beginning we programmed in absolute binary, meaning we wrote the actual address where things were in binary, and wrote the instruction part also in binary! There were two trends to escape this: octal, where you simply group the binary digits in sets of three, and hexadecimal, where you take four digits at a time and have to use A, B, C, D, E, and F to represent the values 10 through 15 (and you had, of course, to learn the addition and multiplication tables up to 15).
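The grouping trick is mechanical enough to show in a few lines. A minimal sketch, assuming the input is a string of binary digits (the function name `group_bits` is hypothetical): pad on the left to a multiple of the group size, then read each group of three (octal) or four (hexadecimal) bits as a single digit.

```python
DIGITS = "0123456789ABCDEF"


def group_bits(bits: str, size: int) -> str:
    """Rewrite a binary string in base 2**size (3 gives octal, 4 gives hexadecimal)."""
    width = -(-len(bits) // size) * size   # round the length up to a multiple of size
    bits = bits.zfill(width)               # pad with leading zeros
    return "".join(
        DIGITS[int(bits[i:i + size], 2)]   # each group of bits becomes one digit
        for i in range(0, width, size)
    )


print(group_bits("01100101", 3))  # 145
print(group_bits("01100101", 4))  # 65
```

Both results name the same decimal value, 101; the programmer's job was simply to do this regrouping in the head.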

If, in fixing up an error, you wanted to insert some omitted instructions, then you took the immediately preceding instruction and replaced it by a transfer to some empty space. There you put in the instruction you just wrote over, added the instructions you wanted to insert, followed by a transfer back to the main program. Thus the program soon became a sequence of jumps of the control to strange places. When, as almost always happens, there were errors in the corrections, you then used the same trick again, using some other available space. As a result the control path of the program through storage soon took on the appearance of a can of spaghetti. Why not simply insert them in the run of instructions? Because then you would have to go over the entire program and change all the addresses which referred to any of the moved instructions! Anything but that!
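The patching trick can be sketched on a toy storage model. This is a hypothetical illustration, not historical code: storage is a Python list in which `None` marks an empty word, instructions are `(op, address)` tuples, and `patch` is an invented helper. Nothing already in storage moves, which is exactly why no other address in the program has to change.

```python
def patch(storage: list, at: int, new_instrs: list, free: int) -> list:
    """Insert new_instrs after storage[at] without moving any instruction.

    storage[at] is replaced by a transfer to the free area, which receives
    the displaced instruction, the new ones, and a transfer back to at + 1.
    """
    block = [storage[at], *new_instrs, ("TRANSFER", at + 1)]
    if any(storage[free + k] is not None for k in range(len(block))):
        raise ValueError("free area is not empty")
    storage[free:free + len(block)] = block   # same length, so nothing shifts
    storage[at] = ("TRANSFER", free)          # jump out to the patch
    return storage


storage = [("LOAD", 20), ("ADD", 21), ("STORE", 22), ("HALT", 0)] + [None] * 6
patch(storage, 1, [("ADD", 23)], free=4)
# Control now runs 0 -> 1 -> 4 -> 5 -> 6 -> 2 -> 3.
for addr, word in enumerate(storage[:7]):
    print(addr, word)
```

Patching an error inside the patch means calling `patch` again on yet another free area, and the control path grows one more detour each time.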

We very soon got the idea of reusable software, as it is now called. Indeed, Babbage had the idea. We wrote mathematical libraries to reuse blocks of code. But an absolute address library meant each time the library routine was used it had to occupy the same locations in storage. When the complete library became too large we had to go to relocatable programs. The necessary programming tricks were in the von Neumann reports, which were never formally published.
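One way to picture relocation: assemble each library routine as if it started at address 0, mark the address fields that point inside the routine, and add the load address to just those fields when the routine is brought into storage. This is a minimal sketch of that idea; the per-word flag and the name `relocate` are assumptions for illustration, not a description of the tricks in the von Neumann reports.

```python
def relocate(routine: list, base: int) -> list:
    """Return a copy of a routine assembled at origin 0, moved to start at base.

    Each word is (op, address, relocatable); only flagged addresses, which
    point inside the routine itself, are shifted by base.
    """
    return [(op, addr + base if rel else addr) for op, addr, rel in routine]


routine = [
    ("LOAD", 3, True),         # operand lives inside the routine
    ("ADD", 500, False),       # fixed location outside the routine
    ("JUMP_IF_NEG", 0, True),  # transfer back to the routine's own start
    ("DATA", 7, False),        # a constant, not an address
]
print(relocate(routine, 1200))
# [('LOAD', 1203), ('ADD', 500), ('JUMP_IF_NEG', 1200), ('DATA', 7)]
```

The same routine can now be loaded wherever there is room, so the library no longer has to claim fixed locations in storage.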

Someone got the idea a short piece of program could be written which would read in the symbolic names of the operations (like add) and translate them at input time to the binary representations used inside the machine (say 01100101). This was soon followed by the idea of using symbolic addresses—a real heresy for the old time programmers. You do not now see much of the old heroic absolute programming (unless you fool with a handheld programmable computer and try to get it to do more than the designer and builder ever intended).
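A symbolic assembler of the kind described can be sketched in two passes: the first assigns a storage address to every symbolic label, the second replaces operation names and symbolic addresses with their numbers. The opcode table, the word layout (a 4-bit operation code above a 12-bit address), and the name `assemble` are hypothetical choices made only for this sketch.

```python
# Hypothetical 4-bit operation codes; word = opcode in the top 4 bits, address in the low 12.
OPCODES = {"LOAD": 0b0001, "ADD": 0b0010, "STORE": 0b0011, "HALT": 0b1111}


def assemble(source: list) -> tuple[list, dict]:
    """Two-pass assembly of (label, op, operand) lines into integer words."""
    # Pass 1: each line occupies one word, so a label's address is its line index.
    symbols = {label: addr for addr, (label, _, _) in enumerate(source) if label}
    # Pass 2: translate symbolic names into numbers.
    words = []
    for _, op, operand in source:
        if op == "DATA":
            words.append(int(operand))                 # a constant, stored as is
        else:
            address = symbols[operand] if operand else 0
            words.append((OPCODES[op] << 12) | address)
    return words, symbols


source = [
    (None, "LOAD", "X"),
    (None, "ADD", "Y"),
    (None, "STORE", "SUM"),
    (None, "HALT", None),
    ("X", "DATA", "5"),
    ("Y", "DATA", "7"),
    ("SUM", "DATA", "0"),
]
words, symbols = assemble(source)
print(symbols)                               # {'X': 4, 'Y': 5, 'SUM': 6}
print([f"{w:016b}" for w in words[:4]])      # the instructions as binary words
```

Inserting a line in `source` and reassembling updates every address automatically, which is precisely the chore the absolute programmers dreaded.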

I once spent a full year, with the help of a lady programmer from Bell Telephone Laboratories, on one big problem coding in absolute binary for the ibm 701, which used all the 32K registers then available. After that experience I vowed never again would I ask anyone to do such labor. Having heard about a symbolic system from Poughkeepsie, ibm, I asked her to send for it and to use it on the next problem, which she did. As I expected, she reported it was much easier. So we told everyone about the new method, meaning about 100 people, who were also eating at the ibm cafeteria near where the machine was. About half were ibm people and half were, like us, outsiders renting time. To my knowledge only one person—yes, only one—of all the 100 showed any interest!

Finally, a more complete, and more useful, Symbolic Assembly Program (sap) was devised—after more years than you are apt to believe, during which time most programmers continued their heroic absolute binary programming. At the time sap first appeared I would guess about 1% of the older programmers were interested in it—using sap was “sissy stuff,” and a real programmer would not stoop to wasting machine capacity to do the assembly. Yes! Programmers wanted no part of it, though when pressed they had to admit their old methods used more machine time in locating and fixing up errors than the sap program ever used. One of the main complaints was when using a symbolic system you didn’t know where anything was in storage—though in the early days we supplied a mapping of symbolic to actual storage, and believe it or not they later lovingly pored over such sheets rather than realize they did not need to know that information if they stuck to operating within the system—no! When correcting errors they preferred to do it in absolute binary addresses.

Physically, the management of the ibm 701, at ibm Headquarters in New York City where we rented time, was terrible. It was a sheer waste of machine time (at that time $300 per hour was a lot) as well as human time. As a result I later refused to order a big machine until I had figured out how to have a monitor system—which someone else finally built for our first ibm 709 and later modified for the ibm 7094.

Again, monitors, often called “the system” these days, like all the earlier steps I have mentioned, should be obvious to anyone who is involved in using the machines from day to day; but most users seem too busy to think or observe how bad things are and how much the computer could do to make things significantly easier and cheaper. To see the obvious it often takes an outsider, or else someone like me who is thoughtful and wonders what he is doing and why it is all necessary. Even when told, the old timers will persist in the ways they learned, probably out of pride for their past and an unwillingness to admit there are better ways than those they were using for so long.

One way of describing what happened in the history of software is that we were slowly going from absolute to virtual machines. First, we got rid of the actual code instructions, then the actual addresses, then in fortran the necessity of learning a lot of the insides of these complicated machines and how they worked. We were buffering the user from the machine itself. Fairly early at Bell Telephone Laboratories we built some devices to make the tape units virtual, machine independent. When, and only when, you have a totally virtual machine will you have the ability to transfer software from one machine to another without almost endless trouble and errors.

fortran was successful far beyond anyone’s expectations because of the psychological fact it was just what its name implied—formula translation of the things one had always done in school; it did not require learning a new set of ways of thinking.

Algol, around 1958–1960, was backed by many worldwide computer organizations, including the acm. It was an attempt by the theoreticians to greatly improve fortran. But being logicians, they produced a logical, not a humane, psychological language, and of course, as you know, it failed in the long run. It was, among other things, stated in a Boolean logical form which is not comprehensible to mere mortals (and often not even to the logicians themselves!). Many other logically designed languages which were supposed to replace the pedestrian fortran have come and gone, while fortran (somewhat modified to be sure) remains a widely used language, indicating clearly the power of psychologically designed languages over logically designed languages.

This was the beginning of a great hope for special languages, pols they were called, meaning problem-oriented languages. There is some merit in this idea, but the great enthusiasm faded because too many problems involved more than one special field, and the languages were usually incompatible. Furthermore, in the long run, they were too costly in the learning phase for humans to master all of the various ones they might need.

- Easy to learn.

- Easy to use.

- Easy to debug (find and correct errors).

- Easy to use subroutines.

The last is something which need not bother you, as in those days we made a distinction between “open” and “closed” subroutines, which is hard to explain now!

I made the two-address fixed point decimal machine look like a three-address floating point machine—that was my goal—A op. B = C. I used the ten decimal digits of the machine (it was a decimal machine so far as the user was concerned) in this form:

| A address | Op. | B address | C address |
|---|---|---|---|
| xxx | x | xxx | xxx |

The software system I built was placed in the storage registers 1,000 to 1,999. Thus any program in the synthetic language, having only three decimal digits, could only refer to addresses 000 to 999, and could not refer to, and alter, any register in the software and thus ruin it: designed-in security protection of the software system from the user.
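The protection argument is pure digit-counting: a three-digit address field can only name 000 to 999, so no synthetic instruction can reach the interpreter in 1,000 to 1,999. The sketch below assumes a 2,000-word storage and a hypothetical assignment of operation digits 1 to 4 to add, subtract, multiply, and divide (the original assignment is not given); it decodes one ten-digit word and carries out A op B = C.

```python
import operator

# Hypothetical operation digits; the original assignment is not given in the text.
OPS = {1: operator.add, 2: operator.sub, 3: operator.mul, 4: operator.truediv}


def decode(word: int) -> tuple[int, int, int, int]:
    """Split a ten-digit decimal word into A (3 digits), op (1), B (3), C (3)."""
    if not 0 <= word <= 9_999_999_999:
        raise ValueError("not a ten-digit word")
    a, rest = divmod(word, 10**7)
    op, rest = divmod(rest, 10**6)
    b, c = divmod(rest, 10**3)
    return a, op, b, c


def execute(word: int, memory: list) -> None:
    """Carry out A op B = C, storing the result in memory[C]."""
    a, op, b, c = decode(word)
    # Every field is at most 999, so user code can never touch words 1000-1999.
    memory[c] = OPS[op](memory[a], memory[b])


memory = [0.0] * 2000                  # 000-999 for the user, 1000-1999 for the system
memory[10], memory[11] = 1.5, 2.25
execute(int("0101011012"), memory)     # A=010, op=1 (add), B=011, C=012
print(memory[12])                      # 3.75
```

Even the largest possible word decodes to addresses of 999, so the security comes from the instruction format itself rather than from any runtime check.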

The human animal is not reliable, as I keep insisting, so low redundancy means lots of undetected errors, while high redundancy tends to catch the errors. The spoken language goes over an acoustic channel with all its noise and must be caught on the fly as it is spoken; the written language is printed, and you can pause, backscan, and do other things to uncover the author’s meaning. Notice that in English it is more common for different words to have the same sound (“there” and “their,” for example) than for words with the same spelling to have different sounds (“record” as a noun or a verb, and “tear” as in a tear in the eye vs. a tear in a dress). Thus you should judge a language by how well it fits the human animal as it is—and remember I include how they are trained in school, or else you must be prepared to do a lot of training to handle the new type of language you are going to use. That a language is easy for the computer expert does not mean it is necessarily easy for the non-expert, and it is likely non-experts will do the bulk of the programming (coding, if you wish) in the near future.

What is wanted in the long run, of course, is that the man with the problem does the actual writing of the code with no human interface, as we all too often have these days, between the person who knows the problem and the person who knows the programming language. This date is unfortunately too far off to do much good immediately, but I would think by the year 2020 it would be fairly universal practice for the expert in the field of application to do the actual program preparation rather than have experts in computers (and ignorant of the field of application) do the program preparation.

Until we better understand languages of communication involving humans as they are (or can be easily trained), it is unlikely many of our software problems will vanish.

You read constantly about “engineering the production of software,” both for the efficiency of production and for the reliability of the product. But you do not expect novelists to “engineer the production of novels.” The question arises: “Is programming closer to novel writing than it is to classical engineering?” I suggest yes! Given the problem of getting a man into outer space, both the Russians and the Americans did it pretty much the same way, all things considered, and allowing for some espionage. They were both limited by the same firm laws of physics. But give two novelists the problem of writing on “the greatness and misery of man,” and you will probably get two very different novels (without saying just how to measure this). Give the same complex problem to two modern programmers and you will, I claim, get two rather different programs. Hence my belief that current programming practice is closer to novel writing than it is to engineering. The novelists are bound only by their imaginations, which is somewhat as the programmers are when they are writing software. Both activities have a large creative component, and while you would like to make programming resemble engineering, it will take a lot of time to get there—and maybe you really, in the long run, do not want to do it! Maybe it just sounds good. You will have to think about it many times in the coming years; you might as well start now and discount the propaganda you hear, as well as all the wishful thinking which goes on in the area! The software of the utility programs of computers has been done often enough, and is so limited in scope, that it might reasonably be expected to become “engineered,” but the general software preparation is not likely to be under “engineering control” for many, many years.

There are many proposals on how to improve the productivity of the individual programmer, as well as groups of programmers. I have already mentioned top-down and bottom-up; there are others, such as head programmer, lead programmer, proving the program is correct in a mathematical sense, and the waterfall model of programming, to name but a few. While each has some merit I have faith in only one, which is almost never mentioned—think before you write the program, it might be called. Before you start, think carefully about the whole thing, including what will be your acceptance test that it is right, as well as how later field maintenance will be done. Getting it right the first time is much better than fixing it up later!